
These notes are based on an online course: https://www.youtube.com/playlist?list=PLXO45tsB95cIuXEgV-mvYWRd_hVC43Akk

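Background

Web scraping requires some knowledge of how web pages work. You can brush up here: HTML, JS

A simple scraper example

Approach: open the web page, use a regular expression to select the content you are looking for, then print the result.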
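Before pointing the regular expression at a live page, you can try it offline. The sketch below uses a hard-coded HTML string (hypothetical content standing in for the `urlopen()` result). It shows why the pattern uses the non-greedy `.+?`: the greedy `.+` keeps going until the last `</title>` on the line.

```python
import re

# Hypothetical snippet standing in for the page returned by urlopen(),
# so the example runs without network access.
html = "<head><title>Page A</title></head><body><title>Page B</title></body>"

# Non-greedy: .+? stops at the first </title> it can reach.
lazy = re.findall(r"<title>(.+?)</title>", html)

# Greedy: .+ grabs as much as possible and backtracks only to the LAST </title>.
greedy = re.findall(r"<title>(.+)</title>", html)

print(lazy)    # ['Page A', 'Page B']
print(greedy)  # ['Page A</title></head><body><title>Page B']
```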

# -*- coding: utf-8 -*-
"""
@author: kingdeguo
"""
from urllib.request import urlopen
# Import the regular-expression library
import re

# Fetch the whole page with a library function
html = urlopen(
    "https://blog.csdn.net/weixin_44895666"
).read().decode("utf-8")
# print(html)

# Next, use a regular expression to pick out the information you need
title = re.findall(r"<title>(.+?)</title>", html)
print("\n Page title is :", title[0])

Using BeautifulSoup

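This approach is somewhat tedious, though, so the BeautifulSoup module is recommended. BeautifulSoup simplifies the matching process.

Note that BeautifulSoup and lxml must be installed:

pip install beautifulsoup4

pip install lxml

You can also skip lxml and use Python's built-in parser, html.parser, instead.

Other details are explained in the code comments.

Example: scraping the page title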

# -*- coding: utf-8 -*-
"""
@author: kingdeguo
"""
from bs4 import BeautifulSoup
from urllib.request import urlopen

html = urlopen("https://blog.csdn.net/weixin_44895666").read().decode("utf-8")
# print(html)

# First hand the entire page content to BeautifulSoup,
# then choose a parser; lxml is used here
# soup = BeautifulSoup(html, "html.parser")
soup = BeautifulSoup(html, "lxml")

# To output an element, access it as .tagname;
# accesses can be chained across several levels
print(soup.head.title)

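Example: scraping all hyperlinks on a page

The following example tries to find all hyperlinks on the page. It reuses the soup object from the previous example.

# Find all hyperlinks (<a> tags)
all_href = soup.find_all('a')

# Note: printing this shows whole <a> Tag objects, not just the URLs
# print(all_href)

# Strip the tags by reading the href attribute. A Tag's attributes
# work like a dictionary: you get a value by its key
for l in all_href:
    # Some <a> tags have no href; .get() returns None instead of raising,
    # so one such tag no longer stops the whole loop
    href = l.get('href')
    if href is not None:
        print(href)
    else:
        print("mismatch")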
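Some `<a>` tags have no `href`, and many links are relative. Here is a sketch that uses only the standard library. `html.parser.HTMLParser` (the parser BeautifulSoup uses when you pass `"html.parser"`) collects each `href` and skips anchors without one. `urllib.parse.urljoin` then turns relative links into absolute URLs. The HTML snippet and the base URL are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, skipping anchors that have none."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")  # attrs is a list of (name, value) pairs
        if href:  # <a name="top"> has no href, so it is skipped instead of raising
            self.links.append(urljoin(self.base_url, href))


html = """
<a href="/article/1">first</a>
<a name="top">no link here</a>
<a href="https://other.example.org/x">external</a>
<a href="page2.html">relative</a>
"""

collector = LinkCollector("https://example.com/blog/")
collector.feed(html)
collector.close()
for link in collector.links:
    print(link)
```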

Scraping elements by CSS class

Elements marked with a class often hold the information you are after, so selecting by class is an efficient way to scrape.

# -*- coding: utf-8 -*-
"""
@author: Kingdeguo
"""
from bs4 import BeautifulSoup
from urllib.request import urlopen

html = urlopen("https://blog.csdn.net/weixin_44895666").read().decode("utf-8")
soup = BeautifulSoup(html, "lxml")

# Select <div> elements by their class attribute
all_info = soup.find_all('div', {'class': 'title-box'})
print(all_info)
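The `class` attribute can hold several space-separated classes. BeautifulSoup's `{'class': 'title-box'}` matches an element if any one of them is `title-box`. The standard-library sketch below uses `html.parser.HTMLParser` to mimic that check on a hard-coded snippet and collects the text of each matching `<div>`. It is a simplification: it assumes the matching divs contain no nested `<div>`.

```python
from html.parser import HTMLParser


class ClassTextCollector(HTMLParser):
    """Collect the text of <div> elements whose class list contains a target class.

    Simplifying assumption: matching divs contain no nested <div> elements.
    """

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.inside = False
        self.buffer = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            # class="title-box highlight" -> ['title-box', 'highlight']
            classes = (dict(attrs).get("class") or "").split()
            if self.target_class in classes:
                self.inside = True
                self.buffer = []

    def handle_endtag(self, tag):
        if tag == "div" and self.inside:
            self.results.append("".join(self.buffer).strip())
            self.inside = False

    def handle_data(self, data):
        if self.inside:
            self.buffer.append(data)


html = """
<div class="title-box"><a href="/a">Post one</a></div>
<div class="title-box highlight"><a href="/b">Post two</a></div>
<div class="sidebar">Not a title</div>
"""

collector = ClassTextCollector("title-box")
collector.feed(html)
collector.close()
print(collector.results)  # ['Post one', 'Post two']
```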

Using a regular expression to scrape all JPG image links

from bs4 import BeautifulSoup
from urllib.request import urlopen
import re

# Fetch the whole page with a library function
html = urlopen(
    "https://blog.csdn.net/weixin_44895666"
).read().decode("utf-8")
# print(html)

soup = BeautifulSoup(html, "lxml")

# Raw string, so "\." reaches the regex engine as an escaped dot
img_links = soup.find_all("img", {"src": re.compile(r'.*?\.jpg')})
for link in img_links:
    print(link['src'])
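When `find_all()` gets a compiled regex as an attribute value, BeautifulSoup matches it with `search()`. That means `.*?\.jpg` accepts any `src` that contains `.jpg` anywhere. The offline sketch below uses made-up `src` values to compare that pattern with a stricter one. The stricter pattern encodes an assumption about what you want: `.jpg` or `.jpeg` in any case, at the end of the path, optionally followed by a query string.

```python
import re

# Hypothetical src values, as they might appear in <img src="..."> tags.
srcs = [
    "https://img.example.com/a.jpg",
    "https://img.example.com/b.JPG",
    "https://img.example.com/c.jpeg",
    "https://img.example.com/d.jpg?x-oss-process=resize",
    "https://img.example.com/e.jpg.png",
    "https://img.example.com/f.png",
]

# The tutorial's pattern; applied with search(), it matches ".jpg" anywhere.
loose = re.compile(r".*?\.jpg")

# Stricter sketch: .jpg/.jpeg (any case) at the end, optional query string.
strict = re.compile(r"\.jpe?g(\?.*)?$", re.IGNORECASE)

loose_hits = [s for s in srcs if loose.search(s)]
strict_hits = [s for s in srcs if strict.search(s)]
print(loose_hits)   # a.jpg, d.jpg?..., e.jpg.png
print(strict_hits)  # a.jpg, b.JPG, c.jpeg, d.jpg?...
```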

Crawling Baidu Baike

Start from one page and find the links on it. Then pick one of those sub-links at random and keep crawling from there.

from bs4 import BeautifulSoup
from urllib.request import urlopen
import re
import random

base_url = "https://baike.baidu.com"
# Start page: /item/计算机 ("computer"), percent-encoded
his = ["/item/%E8%AE%A1%E7%AE%97%E6%9C%BA"]

for i in range(10):
    try:
        url = base_url + his[-1]
        html = urlopen(url).read().decode("utf-8")
        soup = BeautifulSoup(html, "lxml")
        print(i, " ", soup.find('h1').get_text(), ' url: ', his[-1])

        # Candidate sub-links: entry links that open in a new tab
        sub_urls = soup.find_all("a", {"target": "_blank", "href": re.compile("/item/(%.{2})+$")})

        if len(sub_urls) != 0:
            # Jump to a randomly chosen sub-link
            his.append(random.choice(sub_urls)['href'])
        else:
            # Dead end: go back to the previous page
            his.pop()
    except Exception:
        print("Can't open!")
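The crawl above is a random walk with backtracking. `his` acts as a stack: a random sub-link is pushed, and a dead end is popped. To try the logic offline, here is a sketch over a hypothetical link graph. The `len(his) > 1` guard is an addition. Without it, the stack could empty, and `his[-1]` would then raise.

```python
import random

# Hypothetical link graph: page -> pages it links to (stands in for the
# /item/... links the real crawler extracts from each Baidu Baike page).
links = {
    "/item/A": ["/item/B", "/item/C"],
    "/item/B": ["/item/D"],
    "/item/C": [],  # dead end: no outgoing links
    "/item/D": ["/item/A"],
}


def random_walk(start, steps, seed=0):
    rng = random.Random(seed)  # seeded so the walk is reproducible
    his = [start]              # the history doubles as a stack
    visited = []
    for _ in range(steps):
        current = his[-1]
        visited.append(current)
        sub_urls = links.get(current, [])
        if sub_urls:
            his.append(rng.choice(sub_urls))  # jump to a random linked page
        elif len(his) > 1:
            his.pop()                         # dead end: step back one page
    return visited


print(random_walk("/item/A", 10))
```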

Using requests

# Print the URL
import requests

# The dictionary below holds the query parameters
param = {"q": "kingdeguo"}
r = requests.get("https://www.google.com/search", params=param)
print(r.url)
# Output: https://www.google.com/search?q=kingdeguo
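requests turns the `params` dict into the query string. The standard library's `urllib.parse.urlencode` shows the same kind of encoding offline, although requests' exact output may differ in edge cases. This also explains the percent-encoded path in the Baidu Baike example: it is the UTF-8 encoding of 计算机.

```python
from urllib.parse import urlencode, quote

# Same parameter dict as in the requests example.
param = {"q": "kingdeguo"}
url = "https://www.google.com/search?" + urlencode(param)
print(url)  # https://www.google.com/search?q=kingdeguo

# Non-ASCII text is encoded as UTF-8 bytes, each written as %XX.
encoded = urlencode({"q": "计算机"})
path = "/item/" + quote("计算机")
print(encoded)  # q=%E8%AE%A1%E7%AE%97%E6%9C%BA
print(path)     # /item/%E8%AE%A1%E7%AE%97%E6%9C%BA
```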

If you also import the webbrowser module, the code can open this URL directly in your computer's default browser.

import requests
import webbrowser

param = {"q": "kingdeguo"}
r = requests.get("https://www.google.com/search", params=param)
print(r.url)
webbrowser.open(r.url)

Next, let's use requests to log in. Some sites, such as Zhihu, show different content before and after login, and this can affect later scraping.

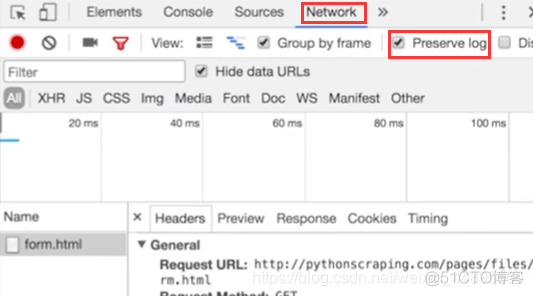

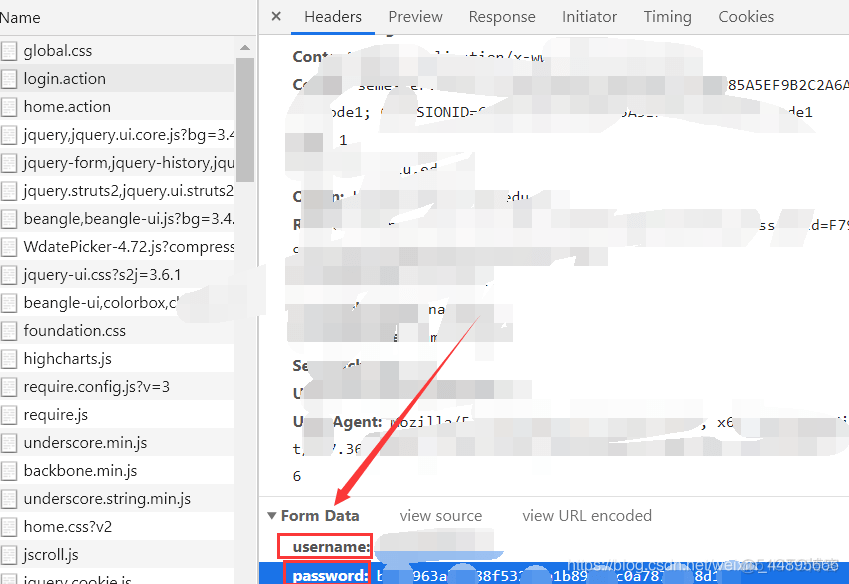

In the screenshots above, you can find the field names of the POST form data. Then write the request in the format below.

import requests

data = {"username": "username", "password": "password"}
r = requests.post("https://***", data=data)
print(r.text)
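When `data=` is a dict, requests sends the fields as an `application/x-www-form-urlencoded` body. As a standard-library sketch, `urllib.parse.urlencode` builds that kind of body, and `urllib.request.Request` wraps it into a POST request without sending it. The login URL below is hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request

data = {"username": "username", "password": "password"}

# Form-encode the fields, as a form POST body would be.
body = urlencode(data).encode("utf-8")
print(body)  # b'username=username&password=password'

# Hypothetical login URL; the request is only built here, never sent.
req = Request(
    "https://example.com/login",
    data=body,
    headers={"Content-Type": "application/x-www-form-urlencoded"},
)
print(req.get_method())  # POST (a Request with data defaults to POST)
```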

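Uploading a local image via POST to use Baidu's image search.

import requests

# Open the image in binary mode; the with block closes the file afterwards
with open("./1.jpg", "rb") as f:
    file = {"sign": f}
    r = requests.post(
        "https://graph.baidu.com/s",
        files=file
    )
print(r.text)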

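Using cookies

import requests

payload = {"uid": "", "upw": ""}
# Log in; the server returns its cookies in the response
r = requests.post("https://***", data=payload)
print(r.cookies.get_dict())

# Pass those cookies explicitly to access a page that requires login
r = requests.get("https://***", cookies=r.cookies)
print(r.text)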
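`r.cookies` holds what the server sent in its `Set-Cookie` headers. For an offline illustration using only the standard library, `http.cookies.SimpleCookie` can parse such a header into the same kind of `{name: value}` dict. The header value here is hypothetical. The follow-up request sends these pairs back in a single `Cookie` header.

```python
from http.cookies import SimpleCookie

# Hypothetical Set-Cookie value, like one a login endpoint might return.
raw = "sessionid=abc123; Path=/; HttpOnly"

cookie = SimpleCookie()
cookie.load(raw)  # Path and HttpOnly are attributes, not separate cookies

# Flatten to {name: value}, the same shape as r.cookies.get_dict().
cookie_dict = {name: morsel.value for name, morsel in cookie.items()}
print(cookie_dict)  # {'sessionid': 'abc123'}

# What the next request carries back to the server.
cookie_header = "; ".join(f"{k}={v}" for k, v in cookie_dict.items())
print(cookie_header)  # sessionid=abc123
```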

Using a session

import requests

session = requests.Session()
payload = {"uid": "", "upw": ""}
r = session.post("https://***", data=payload)
print(r.cookies.get_dict())

# The session already holds the cookies, so they are sent automatically
r = session.get("https://***")
print(r.text)

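Downloading files

import os

os.makedirs('./img/', exist_ok=True)
IMAGE_URL = 'https://avatar.csdnimg.cn/C/8/C/3_weixin_44895666_1568427141.jpg'

# Method 1
from urllib.request import urlretrieve
urlretrieve(IMAGE_URL, './img/image.jpg')

# Method 2
import requests
r = requests.get(IMAGE_URL)
with open('./img/image2.jpg', 'wb') as f:
    f.write(r.content)

# Method 3
# Suited to large files such as movies: with stream=True the body is
# written chunk by chunk instead of being held in memory all at once
r = requests.get(IMAGE_URL, stream=True)
with open('./img/image3.jpg', 'wb') as f:
    # Larger chunks mean fewer write calls than tiny 32-byte chunks
    for chunk in r.iter_content(chunk_size=8192):
        f.write(chunk)

Parallel crawling with multiprocessing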

Without parallelism, the pages are usually processed sequentially, one loop iteration after another, as follows:

import re
import time
from urllib.request import urlopen
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "https://mofanpy.com/"

def crawl(url):
    response = urlopen(url)
    time.sleep(0.1)  # short pause after each request
    return response.read().decode()

def parse(html):
    soup = BeautifulSoup(html, "lxml")
    # Internal links of the form /xxx/
    urls = soup.find_all('a', {"href": re.compile('^/.+?/$')})
    title = soup.find('h1').get_text().strip()
    # Turn relative links into absolute URLs
    page_urls = set([urljoin(base_url, url['href']) for url in urls])
    url = soup.find('meta', {'property': 'og:url'})['content']
    return title, page_urls, url

unseen = set([base_url, ])
seen = set()

count, t1 = 1, time.time()

while len(unseen) != 0:
    # Stop after a limited number of pages
    if len(seen) > 20:
        break

    print("Crawling")
    htmls = [crawl(url) for url in unseen]

    print("Parsing")
    results = [parse(html) for html in htmls]

    print("Analysing")
    seen.update(unseen)
    unseen.clear()
    for title, page_urls, url in results:
        count += 1
        # Queue only the pages that have not been crawled yet
        unseen.update(page_urls - seen)

print("total time: %.1f s" % (time.time() - t1))
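The `seen`/`unseen` bookkeeping above is a breadth-first crawl. Each round crawls the whole frontier, then queues only links that have not been crawled yet. Here is a minimal offline sketch in which a hypothetical dictionary stands in for fetching and parsing pages.

```python
# Hypothetical site map: page -> set of pages it links to.
site = {
    "/": {"/a/", "/b/"},
    "/a/": {"/", "/c/"},
    "/b/": {"/c/"},
    "/c/": set(),
}

unseen = {"/"}
seen = set()
rounds = 0
while unseen:
    rounds += 1
    # The real crawler downloads and parses every URL in this batch;
    # here "parsing" is just a dictionary lookup.
    results = [site[url] for url in unseen]
    seen.update(unseen)
    unseen.clear()
    for page_urls in results:
        unseen.update(page_urls - seen)  # skip pages already crawled

print(rounds, sorted(seen))  # 3 ['/', '/a/', '/b/', '/c/']
```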

With a process pool, the crawling and parsing can be spread across several worker processes:

import multiprocessing as mp
import re
import time
from urllib.request import urlopen
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "https://mofanpy.com/"

def crawl(url):
    response = urlopen(url)
    time.sleep(0.1)  # short pause after each request
    return response.read().decode()

def parse(html):
    soup = BeautifulSoup(html, "lxml")
    urls = soup.find_all('a', {"href": re.compile('^/.+?/$')})
    title = soup.find('h1').get_text().strip()
    page_urls = set([urljoin(base_url, url['href']) for url in urls])
    url = soup.find('meta', {'property': 'og:url'})['content']
    return title, page_urls, url

# The __main__ guard is required where worker processes are started with
# "spawn" (the default on Windows and macOS)
if __name__ == "__main__":
    unseen = set([base_url, ])
    seen = set()

    # Add a pool of 4 worker processes
    pool = mp.Pool(4)

    count, t1 = 1, time.time()

    while len(unseen) != 0:
        if len(seen) > 10:
            break

        print("Crawling")
        # New: download the pages in parallel
        crawl_jobs = [pool.apply_async(crawl, args=(url, )) for url in unseen]
        htmls = [j.get() for j in crawl_jobs]
        # htmls = [crawl(url) for url in unseen]

        print("Parsing")
        # New: parse the pages in parallel
        parse_jobs = [pool.apply_async(parse, args=(html, )) for html in htmls]
        results = [j.get() for j in parse_jobs]
        # results = [parse(html) for html in htmls]

        print("Analysing")
        seen.update(unseen)
        unseen.clear()
        for title, page_urls, url in results:
            count += 1
            unseen.update(page_urls - seen)

    pool.close()
    pool.join()
    print("total time: %.1f s" % (time.time() - t1))
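The tutorial uses a process pool. Crawling mostly waits on the network (it is I/O-bound), so a thread pool is a common alternative. This sketch swaps in `concurrent.futures.ThreadPoolExecutor`. It replaces the real download with a hypothetical `fake_crawl()` that just sleeps for 0.1 s, so you can see the timing difference without network access.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_crawl(url):
    """Hypothetical stand-in for crawl(): sleeps instead of doing network I/O."""
    time.sleep(0.1)
    return f"<html>{url}</html>"


urls = [f"https://example.com/page{i}/" for i in range(8)]

t1 = time.time()
sequential = [fake_crawl(u) for u in urls]
t_seq = time.time() - t1

t1 = time.time()
with ThreadPoolExecutor(max_workers=4) as executor:
    # map() yields results in the same order as the input URLs
    threaded = list(executor.map(fake_crawl, urls))
t_thr = time.time() - t1

print(f"sequential: {t_seq:.2f}s, 4 threads: {t_thr:.2f}s")
```

Processes remain the better fit for CPU-heavy parsing. In standard CPython, threads do not execute Python bytecode in parallel.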
