- NetEase News

- Web crawler

- Python

The comments are fairly detailed, so here is the full code. Corrections and criticism are welcome.

    import csv
    from time import sleep

    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By

    # ASSUMPTION: the original listing never loads a start page before it looks
    # for the channel tabs. The NetEase News home page is assumed here.
    START_URL = 'https://news.163.com/'

    # Create a headless browser object
    chrome_options = Options()
    # Run without a visible window (two ASCII hyphens, not a dash character)
    chrome_options.add_argument('--headless')
    # Needed when running on Windows
    chrome_options.add_argument('--disable-gpu')
    browser = webdriver.Chrome(options=chrome_options)
    # Set a 10-second implicit wait
    browser.implicitly_wait(10)


    # Use headless Chrome to load the JavaScript-rendered list page
    def start_get(url, news_type):
        browser.get(url)
        sleep(1)
        # Scroll to the bottom of the page
        browser.execute_script('window.scrollTo(0,document.body.scrollHeight)')
        sleep(1)
        # Click "load more" if the button exists
        more_btn = browser.find_elements(By.CSS_SELECTOR, '.load_more_btn')
        if more_btn:
            try:
                more_btn[0].click()
            except Exception as e:
                print(e)
                print('Continuing...')
        sleep(1)
        # Scroll to the bottom again
        browser.execute_script('window.scrollTo(0,document.body.scrollHeight)')
        # Grab the page source and pass the channel name along explicitly
        source = browser.page_source
        parse_page(source, news_type)


    # Parse the news list page
    def parse_page(html, news_type):
        # Build the etree object
        tree = etree.HTML(html)
        new_lst = tree.xpath('//div[@class="news_title"]//a')
        for one_new in new_lst:
            try:
                title = one_new.xpath('./text()')[0]
                link = one_new.xpath('./@href')[0]
                write_in(title, link, news_type)
            except Exception as e:
                print(e)


    # Open one article and append its fields to the CSV file
    def write_in(title, link, news_type):
        print('Writing article: {}'.format(title))
        browser.get(link)
        sleep(1)
        # Scroll to the bottom again
        browser.execute_script('window.scrollTo(0,document.body.scrollHeight)')
        # Grab the page source
        tree = etree.HTML(browser.page_source)

        alist = [news_type, title, link]

        # Article body: join the text of every paragraph
        content_lst = tree.xpath('//div[@class="post_text"]//p')
        con = ''
        for one_content in content_lst:
            if one_content.text:
                con = con + '\n' + one_content.text.strip()
        alist.append(con)

        # Publication time: the text before "来源:" in the time/source line.
        # split() returns the whole string when the marker is absent.
        post_time_source = tree.xpath('//div[@class="post_time_source"]')[0].text or ''
        post_time = post_time_source.split("来源:")[0].strip()
        alist.append(post_time)

        # Source name, taken from the link inside the time/source line
        post_source = tree.xpath('//div[@class="post_time_source"]/a[@id="ne_article_source"]')[0].text
        alist.append(post_source)

        # Comment-thread counters (the tiecount / tiejoincount links)
        tiecount = tree.xpath('//a[@class="js-tiecount js-tielink"]')[0].text
        alist.append(tiecount)

        tiejoincount = tree.xpath('//a[@class="js-tiejoincount js-tielink"]')[0].text
        alist.append(tiejoincount)

        # Append the row to the CSV file; the with-block closes the file
        print(alist)
        with open('网易.csv', 'a+', encoding='utf-8', newline='') as f:
            csv.writer(f).writerow(alist)


    if __name__ == '__main__':
        browser.get(START_URL)
        # Read each tab's text and href up front: the elements go stale once
        # the browser navigates to another page
        tabs = []
        for one in browser.find_elements(By.XPATH, '//a[@ne-role="tab-nav"]'):
            tabs.append((one.text, one.get_attribute('href')))

        for news_type, url in tabs:
            if url and 'http' in url:
                start_get(url, news_type)

        browser.quit()
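The time/source handling in `write_in` can be pulled out into a small pure function that is easy to check without a browser. The sketch below is only an illustration: `split_time_and_source` is a hypothetical helper that is not part of the script, and the sample strings are made up rather than copied from a real page. On the actual page the source name sits in a separate link element, so the second value may well come back empty there.

```python
def split_time_and_source(text, marker="来源:"):
    """Split a 'time + marker + source' string into (time, source).

    Hypothetical helper for illustration; not part of the original script.
    """
    text = (text or "").strip()
    if marker in text:
        # Split only on the first marker so the source text stays intact
        time_part, source_part = text.split(marker, 1)
        return time_part.strip(), source_part.strip()
    # No marker: treat the whole string as the time, with no inline source
    return text, ""


# Made-up sample inputs
print(split_time_and_source("2020-01-06 10:21:00 来源: 网易新闻"))
print(split_time_and_source("2020-01-06 10:21:00"))
```

Handling the "no marker" case explicitly avoids indexing into the string as if it were an element, which is what the `else` branch of the listing attempts.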

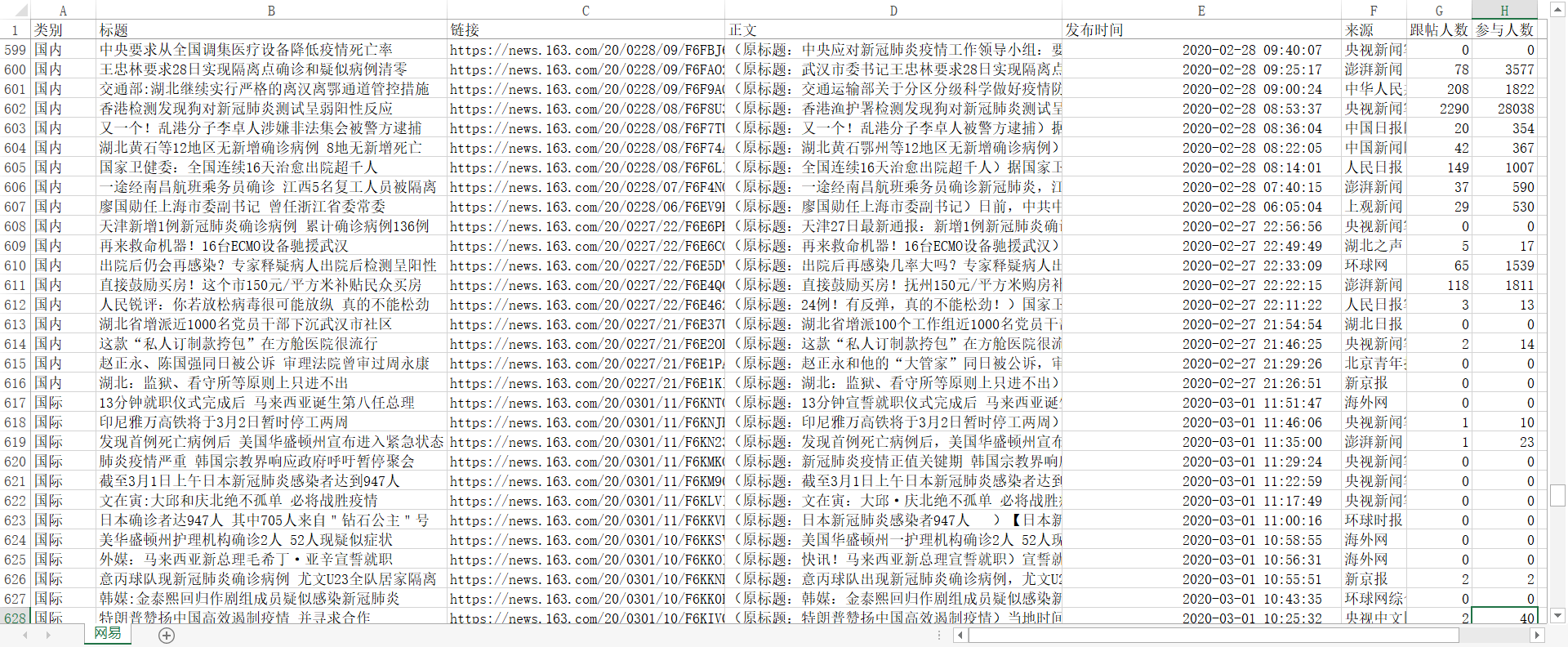

结果如下:
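To sanity-check `网易.csv` without opening it by hand, a short script can count how many rows were written per channel. This is a minimal sketch, assuming the file has no header row and that the first column holds the news type, as in the `alist` order used by `write_in`. `count_by_type` is a hypothetical name introduced here. Counting physical lines would overcount, because the article body column contains newlines, so the sketch goes through `csv.reader` instead.

```python
import csv
import os
from collections import Counter


def count_by_type(csv_path):
    """Count rows per news type (first column) in a headerless CSV file."""
    counts = Counter()
    # newline="" lets the csv module handle newlines inside quoted fields
    with open(csv_path, encoding="utf-8", newline="") as f:
        for row in csv.reader(f):
            if row:  # skip completely empty records
                counts[row[0]] += 1
    return counts


# Only report if the scraper has already produced its output file
if os.path.exists("网易.csv"):
    for news_type, n in count_by_type("网易.csv").most_common():
        print(news_type, n)
```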

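Note: This article is for technical exchange only and must not be used for commercial purposes. The author bears no responsibility for anyone who disregards this.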
