Table of Contents

- 1. Crawling Images from a Website
- 2. Gathering Information from Fofa
  - (1) Conventional Scraping
  - (2) API Retrieval

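1. Crawling Images from a Website

Crawl an image-gallery site: following the categories in the home page navigation bar, download only the first 5 pages of each category. The code is as follows: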

    import os
    import re

    import requests
    from bs4 import BeautifulSoup

    # Browser-like User-Agent sent with every request (this site needs no cookie)
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:88.0) Gecko/20100101 Firefox/88.0"}

    # The real URL is withheld for copyright reasons
    url = "https://www.xxxx.net"


    def get_imagename(image_url):
        # Extract the file name (e.g. "abc-123.jpg") from the end of the image URL
        return re.search(r"/([0-9a-zA-Z-]*\.jpg)$", image_url).group(1)


    def save_images(pathname, page_url):
        # Create the category directory if it does not exist yet
        os.makedirs(pathname, exist_ok=True)

        # Request the page link
        res = requests.get(page_url, headers=headers)
        soup = BeautifulSoup(res.content.decode("gbk"), "html.parser")

        # Save the images one by one into the directory
        for i in soup.find_all("img"):
            image_url = i["src"]
            image_name = get_imagename(image_url)
            # The with statement closes the file, so no explicit close() is needed
            with open(os.path.join(pathname, image_name), "wb") as f:
                f.write(requests.get(image_url, headers=headers).content)


    def get_category_images(tag):
        category_url = tag["href"]
        category = tag["title"]

        # Request the category page from the navigation bar
        res = requests.get(category_url, headers=headers)
        soup = BeautifulSoup(res.content.decode("gbk"), "html.parser")

        # Collect the page links, keeping at most the first 5 pages
        prefix = re.match(r"^https://www\.xxxx\.net/r/\d/", category_url).group()
        pages = [prefix + i["href"] for i in soup.find_all("a", target="_self")[:5]]

        # Store the images of every page in a directory named after the category
        for i in pages:
            save_images(category, i)


    def get_images():
        # Request the home page
        res = requests.get(url, headers=headers)
        soup = BeautifulSoup(res.content.decode("gbk"), "html.parser")

        # Get the navigation bar category tags, excluding "Home"
        category = soup.find_all("a", class_="MainNav", title=re.compile(".*"))

        # Download and store the images behind each category link
        for i in category:
            get_category_images(i)


    if __name__ == "__main__":
        get_images()

Output:
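The `get_imagename` function above only accepts URLs ending in a `.jpg` name made of letters, digits and hyphens, and raises an error for anything else. As a minimal sketch of a more tolerant alternative, the file name can be taken from the URL path instead. The helper name `image_name_from_url` and the fallback name `unnamed.jpg` are assumptions made for this illustration, not part of the original script.

```python
import posixpath
from urllib.parse import unquote, urlsplit


def image_name_from_url(image_url, default="unnamed.jpg"):
    """Return the last path segment of image_url as a file name.

    Hypothetical helper: ignores the query string and fragment, and falls
    back to `default` when the URL path has no file name at all.
    """
    path = urlsplit(image_url).path
    name = posixpath.basename(unquote(path))
    return name or default


if __name__ == "__main__":
    print(image_name_from_url("https://www.xxxx.net/uploads/a-1.jpg"))
    print(image_name_from_url("https://www.xxxx.net/uploads/b.png?size=large"))
```

Whether non-JPEG images should be saved at all depends on the target site, so the original regex may still be preferable when only `.jpg` files are wanted.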

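2. Gathering Information from Fofa

For sites that provide an API, the API documentation offers a quick and convenient way to get information. But when a site has no API, when the data returned by the API is incomplete, or when the API requires payment, you still need to analyze the request flow and gather the information with a conventional crawler. Taking Fofa as an example, here are two ways to collect information:

(1) Conventional Scraping

First, use the browser's developer tools to trace the page's requests and responses. The login page requires a CAPTCHA, so for convenience log in manually and record the cookie value to use as the login credential. Mind the cookie's lifetime: before running the crawler, it is best to log in again and use a fresh cookie. The code is as follows: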

import base64, requests, time, random from bs4 import BeautifulSoup

# 携带cookie作为登录凭证 headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:88.0) Gecko/20100101 Firefox/88.0", "Cookie": "befor_router=%2F; " \ "fofa_token=xxxxxx" \ "refresh_token=xxxxxx" }

# Fofa查询关键字 query = "domain=\"baidu.com\""

# 根据请求规则,qbase64为查询关键字的base64编码 # base64.b64encode编码后返回字节,需要编码转换为字符串 query_encode = query.encode("utf-8") url = "https://fofa.so/result?qbase64=" + str(base64.b64encode(query_encode), "utf8")

# 定义列数不匹配异常 # 爬虫只能针对站点信息进行扫描,如果查询资产的IP或域名链接不能访问则会报错 MatchError = Exception("url_list does not match the number of columns ip_list, replace the query keyword… ")

# 随机延时 def time_sleep(): random_time = random.uniform(3.0, 10.0) time.sleep(random_time)

# 获取当前页面(url,ip)列表 def fetch_url(soup): # (url,ip)列表 target_list = []

# 获取包含url的标签对象列表、 # url列表最后一个为无关对象:粤ICP备16088626号,需要将其移除 url_list = soup.find_all("a", target="_blank") url_list.remove(soup.find_all("a", target="_blank").pop())

# 获取包含ip的标签对象列表 div_list = soup.find_all("div", class_ = "contentLeft") ip_list = [] for i in div_list: ip_list.append(i.find("a"))

# 验证url与ip的标签对象列表一一对应 if len(url_list) == len(ip_list): # 遍历获取(url,ip)列表 for i in range(len(url_list)): target = (url_list[i].contents[0], ip_list[i].string) target_list.append(target) return target_list

else: raise MatchError

def search(query): # (url,ip)列表 target_list = []

# 发送查询请求 res = requests.get(url, headers=headers) soup = BeautifulSoup(res.content.decode(), "html.parser")

# 查询条目总数 # 条目数可能是1,533形式,需要将','去除 item_total_num_str = soup.find("span", class_ = "pSpanColor").string item_total_num =int(item_total_num_str.replace(',', '')) pages_list = soup.find_all("li", class_=["number active", "number"])

# 查询页码总数 pages_total_num = int(pages_list.pop().string)

if item_total_num == 0: print("Reasults:0\n") return

# 因为Fofa普通注册用户只能查询50条数据,所以需要判断条目总数 elif item_total_num <= 50: item_query_num = item_total_num for i in range(pages_total_num): res = requests.get(url + "&page=%d&page_size=10"%(i+1), headers=headers) soup = BeautifulSoup(res.content.decode(), "html.parser")

# 循环获取每页的查询结果 for j in fetch_url(soup): target_list.append(j)

# 延时,避免频繁请求 time_sleep()

else: item_query_num = 50 for i in range(5): res = requests.get(url + "&page=%d&page_size=10"%(i+1), headers=headers) soup = BeautifulSoup(res.content.decode(), "html.parser")

# 循环获取每页的查询结果 for j in fetch_url(soup): target_list.append(j)

# 延时,避免频繁请求 time_sleep()

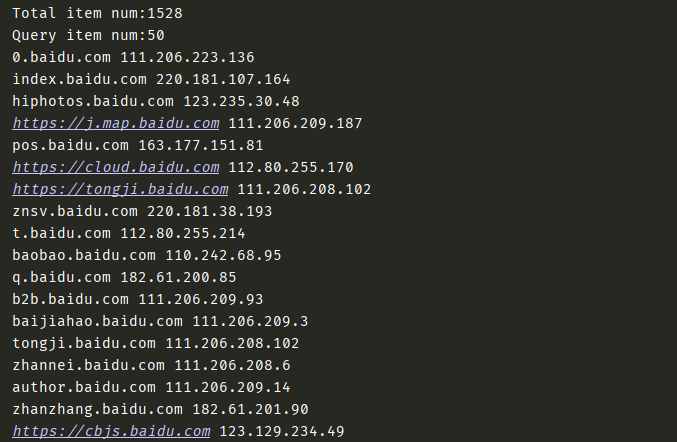

print("Total item num:%d" % item_total_num) print("Query item num:%d" % item_query_num) for i in target_list: print(i[0], i[1])

if __name__ == "__main__": search(query)

运行结果:
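Two small pieces of the crawler above can be isolated and checked without touching the network: building the result URL from the query, and working out how many pages to request under the 50-entry limit. The sketch below is only illustrative. The helper names `build_result_url` and `pages_to_fetch` are hypothetical, and the use of `urlencode` (which percent-encodes any `+`, `/` or `=` in the base64 string) is an added precaution rather than something the original script does.

```python
import base64
import math
from urllib.parse import urlencode

BASE_URL = "https://fofa.so/result"  # result page URL used in the article
PAGE_SIZE = 10                       # entries per page, as in the article
FREE_ITEM_LIMIT = 50                 # entry limit for ordinary registered users


def build_result_url(query, page=1, page_size=PAGE_SIZE):
    """Build the result page URL with the query passed as qbase64."""
    qbase64 = base64.b64encode(query.encode("utf-8")).decode("ascii")
    params = {"qbase64": qbase64, "page": page, "page_size": page_size}
    return BASE_URL + "?" + urlencode(params)


def pages_to_fetch(item_total_str, page_size=PAGE_SIZE, item_limit=FREE_ITEM_LIMIT):
    """Number of pages to request, given a count string such as "1,533"."""
    total = int(item_total_str.replace(",", ""))
    return math.ceil(min(total, item_limit) / page_size)


if __name__ == "__main__":
    print(build_result_url('domain="baidu.com"', page=2))
    print(pages_to_fetch("1,533"))
```

Keeping these steps as pure functions makes it easy to verify the paging logic before spending any requests against the site.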

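(2) API Retrieval

Using the Fofa API requires a membership or account credit. See the Fofa API documentation for usage. The approach is the same as for the conventional crawler, and because the response is JSON, extracting the data is simpler and more efficient. The code is as follows: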

import base64, requests, json

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:88.0) Gecko/20100101 Firefox/88.0"}

# Fofa查询关键字 query = "domain=\"baidu.com\""

# Fofa API api = "https://fofa.so/api/v1/search/all"

# 根据请求规则,qbase64为查询关键字的base64编码 # base64.b64encode编码后返回字节,需要编码转换为字符串 query_encode = query.encode("utf-8") query_qbase64 = str(base64.b64encode(query_encode), "utf8")

params = { # 注册和登陆时填写的email "email": "xxxxxx@xxxxxx", # 会员查看个人资料页面可得到key,为32位hash值 "key": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx", # 经过base64加密的查询字符串 "qbase64": query_qbase64, # 翻页数,默认为第一页 "page": 1, # 每次查询返回记录数,默认100条 "size": 20, # 可选查询字段,默认为host,可选参数包括: # title, ip, domain, port, country, province, city, country_name, header, server, protocol # banner, cert, isp, as_number, as_organization, latitude, longitude, structinfo, icp "fields":"host,ip,country" }

def search(query):

# 发送查询请求 res = requests.get(api, headers=headers, params=params)

# 返回JSON数据格式: # { # "mode": "extended", # "error": false, # "query": "domain=\"nosec.org\"\n", # "page": 1, # "size": 6, # "results": [ # ["https://i.nosec.org"],["https://nosec.org"], # ] # } query_results = json.loads(res.content.decode())

for i in query_results["results"]: print(i)

if __name__ == "__main__": search(query)

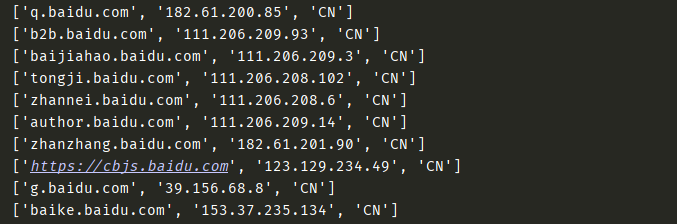

运行结果:
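Each entry in `results` is a list of values in the order requested through `fields`, which is easy to misread once several fields are involved. The sketch below pairs the values with their field names. The helper name `rows_to_records`, the sample response and the `errmsg` key read on failure are assumptions made for illustration, so check them against the Fofa API documentation before relying on them.

```python
def rows_to_records(response, fields):
    """Turn a parsed API response into a list of dicts keyed by field name.

    `response` is the decoded JSON object and `fields` is the same
    comma-separated string that was sent in the request parameters.
    """
    if response.get("error"):
        # "errmsg" is an assumed key name for the error description
        raise RuntimeError(response.get("errmsg", "Fofa API returned an error"))

    names = [name.strip() for name in fields.split(",")]
    records = []
    for row in response.get("results", []):
        # Tolerate a bare string in case a single-field query returns one
        values = [row] if isinstance(row, str) else list(row)
        records.append(dict(zip(names, values)))
    return records


if __name__ == "__main__":
    # Hypothetical sample data, not real query output
    sample = {
        "error": False,
        "results": [["https://example.com", "192.0.2.10", "US"]],
    }
    for record in rows_to_records(sample, "host,ip,country"):
        print(record["host"], record["ip"], record["country"])
```

Working with named fields instead of positional indexes keeps the rest of the script unchanged when the `fields` parameter is reordered or extended.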
