1. Database tables:

    -- The seed data uses id 0 for the default category. MySQL treats an explicit 0 in an
    -- AUTO_INCREMENT column as "generate the next value" unless NO_AUTO_VALUE_ON_ZERO is set.
    SET SESSION sql_mode = 'NO_AUTO_VALUE_ON_ZERO';

    CREATE TABLE `t_arctype` (
      `id` int(11) NOT NULL AUTO_INCREMENT,
      `typeName` varchar(50) DEFAULT NULL,
      `sortNo` int(11) DEFAULT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

    INSERT INTO `t_arctype` (`id`, `typeName`, `sortNo`) VALUES (0, '暂无分类', 4);   -- "Uncategorized"
    INSERT INTO `t_arctype` (`id`, `typeName`, `sortNo`) VALUES (1, 'java技术', 1);   -- "Java"
    INSERT INTO `t_arctype` (`id`, `typeName`, `sortNo`) VALUES (2, '网页技术', 2);   -- "Web"
    INSERT INTO `t_arctype` (`id`, `typeName`, `sortNo`) VALUES (4, '数据库技术', 3); -- "Databases"

    CREATE TABLE `t_article` (
      `id` int(11) NOT NULL AUTO_INCREMENT,
      `title` varchar(200) DEFAULT NULL,
      `content` longtext,
      `summary` varchar(400) DEFAULT NULL,
      `crawlerDate` datetime DEFAULT NULL,
      `clickHit` int(11) DEFAULT NULL,
      `typeId` int(11) DEFAULT NULL,
      `tags` varchar(200) DEFAULT NULL,
      `orUrl` varchar(1000) DEFAULT NULL,
      `state` int(11) DEFAULT NULL,
      `releaseDate` datetime DEFAULT NULL,
      PRIMARY KEY (`id`),
      KEY `typeId` (`typeId`),
      CONSTRAINT `t_article_ibfk_1` FOREIGN KEY (`typeId`) REFERENCES `t_arctype` (`id`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

2. Required dependencies:

    <!-- JDBC driver -->
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.44</version>
    </dependency>

    <!-- HttpClient -->
    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.2</version>
    </dependency>

    <!-- jsoup -->
    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>1.10.1</version>
    </dependency>

    <!-- Logging -->
    <dependency>
        <groupId>log4j</groupId>
        <artifactId>log4j</artifactId>
        <version>1.2.16</version>
    </dependency>

    <!-- Ehcache -->
    <dependency>
        <groupId>net.sf.ehcache</groupId>
        <artifactId>ehcache</artifactId>
        <version>2.10.3</version>
    </dependency>

    <!-- Commons IO -->
    <dependency>
        <groupId>commons-io</groupId>
        <artifactId>commons-io</artifactId>
        <version>2.5</version>
    </dependency>

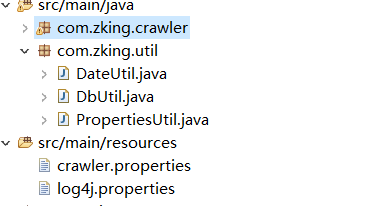

3. Utility classes and configuration files:

3-1: DateUtil class

    package com.zking.util;

    import java.text.SimpleDateFormat;
    import java.util.Date;

    /**
     * Date utility class.
     * @author user
     */
    public class DateUtil {

        /**
         * Returns the current date as a year/month/day path.
         * @return the current date formatted as yyyy/MM/dd
         * @throws Exception
         */
        public static String getCurrentDatePath() throws Exception {
            Date date = new Date();
            SimpleDateFormat sdf = new SimpleDateFormat("yyyy/MM/dd");
            return sdf.format(date);
        }

        public static void main(String[] args) {
            try {
                System.out.println(getCurrentDatePath());
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
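SimpleDateFormat is not thread-safe. That is harmless in DateUtil because a new instance is created on every call, but if the crawler is ever made multi-threaded, the same path could be built with java.time. The following is a minimal sketch under that assumption (Java 8 or later); the class DatePathSketch and its methods are hypothetical names, not part of the original project.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

final class DatePathSketch {
    // DateTimeFormatter is immutable and thread-safe, so one shared instance is enough
    private static final DateTimeFormatter PATH_FORMAT = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    // Formats any date as a year/month/day path
    static String datePath(LocalDate date) {
        return date.format(PATH_FORMAT);
    }

    // Same result shape as DateUtil.getCurrentDatePath(), without a checked exception
    static String currentDatePath() {
        return datePath(LocalDate.now());
    }

    public static void main(String[] args) {
        System.out.println(datePath(LocalDate.of(2019, 7, 4))); // prints 2019/07/04
        System.out.println(currentDatePath());
    }
}
```

Taking the date as a parameter keeps the formatting logic easy to check with a fixed date.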

3-2: DbUtil class

    package com.zking.util;

    import java.sql.Connection;
    import java.sql.DriverManager;

    /**
     * Database utility class.
     * @author user
     */
    public class DbUtil {

        /**
         * Opens a connection using the settings in crawler.properties.
         * @return an open connection
         * @throws Exception
         */
        public Connection getCon() throws Exception {
            Class.forName(PropertiesUtil.getValue("jdbcName"));
            Connection con = DriverManager.getConnection(
                    PropertiesUtil.getValue("dbUrl"),
                    PropertiesUtil.getValue("dbUserName"),
                    PropertiesUtil.getValue("dbPassword"));
            return con;
        }

        /**
         * Closes the connection.
         * @param con the connection to close (may be null)
         * @throws Exception
         */
        public void closeCon(Connection con) throws Exception {
            if (con != null) {
                con.close();
            }
        }

        public static void main(String[] args) {
            DbUtil dbUtil = new DbUtil();
            try {
                Connection con = dbUtil.getCon();
                System.out.println("Database connection succeeded");
                // Close the test connection instead of leaving it open
                dbUtil.closeCon(con);
            } catch (Exception e) {
                e.printStackTrace();
                System.out.println("Database connection failed");
            }
        }
    }

3-3: PropertiesUtil class

    package com.zking.util;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.Properties;

    /**
     * Properties utility class.
     * @author user
     */
    public class PropertiesUtil {

        /**
         * Looks up the value for a key in crawler.properties.
         * @param key the property name
         * @return the property value, or null if the key is missing
         */
        public static String getValue(String key) {
            Properties prop = new Properties();
            // try-with-resources closes the stream after loading
            try (InputStream in = PropertiesUtil.class.getResourceAsStream("/crawler.properties")) {
                if (in == null) {
                    throw new IllegalStateException("crawler.properties not found on the classpath");
                }
                prop.load(in);
            } catch (IOException e) {
                e.printStackTrace();
            }
            return prop.getProperty(key);
        }
    }
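PropertiesUtil.getValue reads the properties file again on every call. For a crawler that looks up several keys per page, loading once and keeping the result may be preferable. A minimal sketch of that idea follows; the class CachedPropertiesSketch is a hypothetical name, and it reads from any Reader so that it can be tried without a file on the classpath.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class CachedPropertiesSketch {
    private final Properties props = new Properties();

    // Loads everything once; later lookups never touch the source again
    CachedPropertiesSketch(Reader source) {
        try (Reader in = source) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns the value for the key, or null if the key is missing
    String getValue(String key) {
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        String text = "dbUserName=root\nblogImages=D://myDome/blogImages/\n";
        CachedPropertiesSketch config = new CachedPropertiesSketch(new StringReader(text));
        System.out.println(config.getValue("dbUserName")); // prints root
        System.out.println(config.getValue("blogImages")); // prints D://myDome/blogImages/
    }
}
```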

3-4: crawler.properties

Note: ehcacheXmlPath is the local path of the ehcache.xml file (the second-level cache configuration).

blogImages is the local directory where downloaded images are saved.

    dbUrl=jdbc:mysql://localhost:3306/blog?autoReconnect=true
    dbUserName=root
    dbPassword=123
    jdbcName=com.mysql.jdbc.Driver
    ehcacheXmlPath=D://myDome/ehcache.xml
    blogImages=D://myDome/blogImages/

3-5: log4j.properties

    log4j.rootLogger=INFO, stdout,D

    #Console
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target = System.out
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=[%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

    #D
    log4j.appender.D = org.apache.log4j.RollingFileAppender
    log4j.appender.D.File = D://myDome/bloglogs/log.log
    log4j.appender.D.MaxFileSize=100KB
    log4j.appender.D.MaxBackupIndex=100
    log4j.appender.D.Append = true
    log4j.appender.D.layout = org.apache.log4j.PatternLayout
    log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

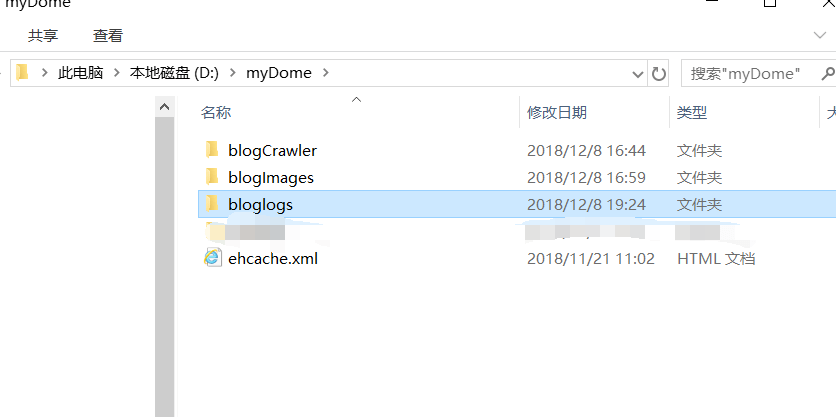

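4. Local paths:

5. Main source code:

5-1: Shared fields:

Note: the main code starts here. Everything below belongs to a single demo class, BlogCrawlerStarter.

    /** Logger for this class. */
    private static Logger logger = Logger.getLogger(BlogCrawlerStarter.class);

    /** URL of the CSDN "newest articles" page that the crawler starts from. */
    private static String HomeURL = "https://www.csdn.net/nav/newarticles";

    private static CloseableHttpClient httpClient;

    // Database connection
    private static Connection con;

    // Cache manager
    private static CacheManager cacheManager;
    // The named cache that remembers already-crawled URLs
    private static Cache cache;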

5-2: Fetch the home page and read its full content (HttpClient)

    /**
     * Fetches the home page and reads its full content (HttpClient).
     */
    public static void parseHomePage() {
        logger.info("Start crawling home page: " + HomeURL);

        // Initialize the cache from the configured ehcache.xml
        cacheManager = CacheManager.create(PropertiesUtil.getValue("ehcacheXmlPath"));
        cache = cacheManager.getCache("cnblog");

        httpClient = HttpClients.createDefault();
        HttpGet httpGet = new HttpGet(HomeURL);

        // Timeouts: 5 s to establish the connection, 8 s waiting for data on the socket
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                logger.info(HomeURL + ": no response");
                return;
            }

            // Only parse the page when the server answered 200 OK
            if (response.getStatusLine().getStatusCode() == 200) {
                HttpEntity entity = response.getEntity();
                // Read the home page body
                String homePageContext = EntityUtils.toString(entity, "utf-8");
                // Extract the blog links from it
                parseHomePageContent(homePageContext);
            }
        } catch (ClientProtocolException e) {
            // Record the exception in the log
            logger.error(HomeURL + ": ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(HomeURL + ": IOException", e);
        } finally {
            try {
                if (response != null) {
                    response.close();
                }
                if (httpClient != null) {
                    httpClient.close();
                }
            } catch (IOException e) {
                logger.error(HomeURL + ": IOException", e);
            }
        }

        // Flush the cache to disk if it is still alive
        if (cache.getStatus() == Status.STATUS_ALIVE) {
            cache.flush();
        }

        // Shut down the cache manager
        cacheManager.shutdown();

        logger.info("Finished crawling home page: " + HomeURL);
    }
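The two timeouts used in parseHomePage are easy to mix up: one bounds how long connecting may take, the other how long the crawler waits for data. As a dependency-free illustration, the sketch below expresses a similar configuration with the JDK's own java.net.http client (Java 11 or later) instead of the Apache HttpClient used in this article. This is a swapped-in API, and its per-request timeout bounds the wait for the response rather than each socket read, so it is not an exact equivalent. The class TimeoutSketch and its methods are hypothetical names.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

final class TimeoutSketch {
    // The connect timeout is a property of the client: 5 seconds here
    static HttpClient newClient() {
        return HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
    }

    // The request timeout bounds the wait for the response: 8 seconds here
    static HttpRequest newGet(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(8))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpClient client = newClient();
        HttpRequest request = newGet("https://www.csdn.net/nav/newarticles");
        // No network traffic happens here; the objects only carry the configuration
        System.out.println(client.connectTimeout().get()); // prints PT5S
        System.out.println(request.timeout().get());       // prints PT8S
    }
}
```

Sending the request would then be a call such as client.send(request, HttpResponse.BodyHandlers.ofString()), which is left out so that the sketch runs without network access.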

5-3: Parse the page with jsoup and extract the data we want (the blog links)

    /**
     * Parses the home page with jsoup and extracts the blog links.
     * @param homePageContext the HTML of the home page
     */
    private static void parseHomePageContent(String homePageContext) {
        // jsoup selects elements with CSS (jQuery-style) selectors
        Document doc = Jsoup.parse(homePageContext);
        // Select the title links inside the feed list
        Elements aEles = doc.select("#feedlist_id .list_con .title h2 a");
        // Visit each of the newest blog links
        for (Element aEle : aEles) {
            String blogUrl = aEle.attr("href");
            // Skip links without an address
            if (null == blogUrl || "".equals(blogUrl)) {
                logger.info("Link has no address; skipping it");
                continue;
            }

            // Skip links that the cache says were already stored
            if (cache.get(blogUrl) != null) {
                logger.info("Already crawled and stored; skipping it");
                continue;
            }

            parseBlogUrl(blogUrl);
        }
    }
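The loop above filters links in two steps: drop empty addresses, then drop addresses that were already seen. The same idea can be shown without Ehcache using an in-memory set. This is only a sketch with hypothetical names (SeenUrlsSketch, markIfNew, filterNew). Unlike the crawler, which records a URL in the cache only after the database insert succeeds and persists the cache to disk, this version marks a URL as seen at check time and forgets everything on restart.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

final class SeenUrlsSketch {
    // Thread-safe set of URLs that have already been accepted
    private final Set<String> seen = ConcurrentHashMap.newKeySet();

    // Returns true only the first time a non-empty URL is offered
    boolean markIfNew(String url) {
        if (url == null || url.isEmpty()) {
            return false;
        }
        return seen.add(url);
    }

    // Keeps the URLs that are non-empty and not seen before, in their original order
    List<String> filterNew(List<String> urls) {
        List<String> fresh = new ArrayList<>();
        for (String url : urls) {
            if (markIfNew(url)) {
                fresh.add(url);
            }
        }
        return fresh;
    }

    public static void main(String[] args) {
        SeenUrlsSketch seenUrls = new SeenUrlsSketch();
        System.out.println(seenUrls.filterNew(Arrays.asList("a", "", "a", "b"))); // prints [a, b]
        System.out.println(seenUrls.filterNew(Arrays.asList("b", "c")));          // prints [c]
    }
}
```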

5-4: Fetch a blog page by its URL to get the title and content

    /**
     * Fetches a blog page by its URL so that its title and content can be parsed.
     * @param blogUrl the address of the blog post
     */
    private static void parseBlogUrl(String blogUrl) {
        logger.info("Start crawling blog page: " + blogUrl);
        // Reuse the client opened in parseHomePage, which closes it when the crawl ends.
        // Creating a new client here for every post would leave all but the last one unclosed.
        HttpGet httpGet = new HttpGet(blogUrl);

        // Timeouts: 5 s to establish the connection, 8 s waiting for data on the socket
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                logger.info(blogUrl + ": no response");
                return;
            }

            // Only parse the page when the server answered 200 OK
            if (response.getStatusLine().getStatusCode() == 200) {
                HttpEntity entity = response.getEntity();
                // Read the blog page body
                String blogPageContent = EntityUtils.toString(entity, "utf-8");
                // Parse the blog content
                parseBlogContent(blogPageContent, blogUrl);
            }
        } catch (ClientProtocolException e) {
            // Record the exception in the log
            logger.error(blogUrl + ": ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(blogUrl + ": IOException", e);
        } finally {
            try {
                if (response != null) {
                    response.close();
                }
            } catch (IOException e) {
                logger.error(blogUrl + ": IOException", e);
            }
        }

        logger.info("Finished crawling blog page: " + blogUrl);
    }

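5-5: Parse the blog page to get the title and the full content, including images

    /**
     * Parses a blog page, extracts the title and the full content, and stores them.
     * @param blogContext the HTML of the blog page
     * @param linkUrl the address the page was fetched from
     */
    private static void parseBlogContent(String blogContext, String linkUrl) {
        Document doc = Jsoup.parse(blogContext);
        // Blog title
        Elements titleEles = doc.select("#mainBox main .article-header-box .article-header .article-title-box h1");
        // Without a title, nothing is inserted
        if (titleEles.size() == 0) {
            logger.info("Blog title is empty; nothing inserted");
            return;
        }
        String title = titleEles.get(0).html();

        // Blog body
        Elements blogcontentEles = doc.select("#content_views");
        if (blogcontentEles.size() == 0) {
            logger.info("Blog content is empty; nothing inserted");
            return;
        }
        String blogcontentdBody = blogcontentEles.get(0).html();

        // Images that belong to the blog body (not every image on the page)
        Elements imgEles = blogcontentEles.get(0).select("img");

        // Collect the image addresses
        List<String> imgUrlList = new LinkedList<String>();
        for (Element imgEle : imgEles) {
            imgUrlList.add(imgEle.attr("src"));
        }

        if (imgUrlList.size() > 0) {
            // Download the images and point the stored body at the local copies.
            // The original replaced addresses in blogContext, which is never stored.
            Map<String, String> replaceUrlMap = downloadImgList(imgUrlList);
            blogcontentdBody = replaceContent(blogcontentdBody, replaceUrlMap);
        }

        // Insert into the database; typeId 0 is the default category
        String sql = "insert into t_article values(null,?,?,null,now(),0,0,null,?,0,null)";
        // try-with-resources closes the statement after use
        try (PreparedStatement pst = con.prepareStatement(sql)) {
            pst.setObject(1, title);
            pst.setObject(2, blogcontentdBody);
            pst.setObject(3, linkUrl);
            if (pst.executeUpdate() == 0) {
                logger.info("Failed to insert the crawled blog into the database");
            } else {
                // Remember the URL so that it is not crawled again
                cache.put(new net.sf.ehcache.Element(linkUrl, linkUrl));
                logger.info("Inserted the crawled blog into the database");
            }
        } catch (SQLException e) {
            logger.error("Database error: SQLException", e);
        }
    }

5-6: Rewrite the crawled content so that the original image addresses point to the local copies

    /**
     * Replaces every original image address in the content with its local address.
     * @param blogContext the HTML to rewrite
     * @param replaceUrlMap original address mapped to local address
     * @return the rewritten HTML
     */
    private static String replaceContent(String blogContext, Map<String, String> replaceUrlMap) {
        for (Map.Entry<String, String> entry : replaceUrlMap.entrySet()) {
            blogContext = blogContext.replace(entry.getKey(), entry.getValue());
        }
        // The original returned null here, which discarded the rewritten content
        return blogContext;
    }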
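String.replace never modifies the string it is called on; it returns a new string, so the result has to be reassigned on each pass and returned at the end. The standalone sketch below shows the intended effect of the replacement step on a small HTML fragment. The class name ReplaceUrlsSketch, the example.com addresses, and the local file names are made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class ReplaceUrlsSketch {
    // Replaces every key found in the HTML with its mapped value and returns the result
    static String replaceAll(String html, Map<String, String> replacements) {
        String result = html;
        for (Map.Entry<String, String> entry : replacements.entrySet()) {
            result = result.replace(entry.getKey(), entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> replacements = new LinkedHashMap<>();
        replacements.put("http://example.com/a.png", "D://myDome/blogImages/a.png");
        String html = "<img src=\"http://example.com/a.png\"><img src=\"http://example.com/b.png\">";
        // Only a.png has a local copy, so b.png keeps its original address
        System.out.println(replaceAll(html, replacements));
    }
}
```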

5-7: Download the remote images to local storage

    /**
     * Downloads the images hosted on the remote server to local storage.
     * @param imgUrlList the original image addresses
     * @return original address mapped to local file path, for the images that were saved
     */
    private static Map<String, String> downloadImgList(List<String> imgUrlList) {
        Map<String, String> replaceMap = new HashMap<String, String>();
        for (String imgUrl : imgUrlList) {
            CloseableHttpClient httpClient = HttpClients.createDefault();
            HttpGet httpGet = new HttpGet(imgUrl);

            // Timeouts: 5 s to establish the connection, 8 s waiting for data on the socket
            RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
            httpGet.setConfig(config);
            CloseableHttpResponse response = null;
            try {
                response = httpClient.execute(httpGet);
                if (response == null) {
                    logger.info(imgUrl + ": no response");
                } else if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity entity = response.getEntity();

                    // Root directory for downloaded images
                    String blogImagesPath = PropertiesUtil.getValue("blogImages");
                    // One sub-folder per day
                    String dateDir = DateUtil.getCurrentDatePath();
                    // A UUID keeps the file names unique
                    String uuid = UUID.randomUUID().toString();
                    // File extension taken from the Content-Type subtype, e.g. image/png gives png
                    String subfix = entity.getContentType().getValue().split("/")[1];
                    // Full local file name
                    String fileName = blogImagesPath + dateDir + "/" + uuid + "." + subfix;

                    // Stream the image to disk
                    FileUtils.copyToFile(entity.getContent(), new File(fileName));

                    replaceMap.put(imgUrl, fileName);
                }
            } catch (ClientProtocolException e) {
                // Record the exception in the log
                logger.error(imgUrl + ": ClientProtocolException", e);
            } catch (Exception e) {
                logger.error(imgUrl + ": Exception", e);
            } finally {
                try {
                    if (response != null) {
                        response.close();
                    }
                    // Also close the per-image client, which the original never closed
                    httpClient.close();
                } catch (IOException e) {
                    logger.error(imgUrl + ": IOException", e);
                }
            }
        }
        return replaceMap;
    }
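Deriving the file extension with split("/")[1] works for a plain header such as image/png, but it yields an awkward extension when the header carries parameters (for example image/png; charset=UTF-8) or a structured subtype (image/svg+xml), and it throws when the header is missing. A more defensive helper might look like the sketch below. The class ImageExtensionSketch, the method extensionFor, and the fallback extension "bin" are assumptions for illustration, not part of the original code.

```java
import java.util.Locale;

final class ImageExtensionSketch {
    // Hypothetical fallback used when the header is missing or is not an image type
    static final String FALLBACK = "bin";

    // Maps a Content-Type header value to a file extension
    static String extensionFor(String contentType) {
        if (contentType == null) {
            return FALLBACK;
        }
        // Drop parameters such as "; charset=UTF-8"
        int semi = contentType.indexOf(';');
        String mime = (semi >= 0 ? contentType.substring(0, semi) : contentType)
                .trim().toLowerCase(Locale.ROOT);
        if (!mime.startsWith("image/")) {
            return FALLBACK;
        }
        String subtype = mime.substring("image/".length());
        // "svg+xml" becomes "svg"
        int plus = subtype.indexOf('+');
        if (plus >= 0) {
            subtype = subtype.substring(0, plus);
        }
        return subtype.isEmpty() ? FALLBACK : subtype;
    }

    public static void main(String[] args) {
        System.out.println(extensionFor("image/jpeg"));               // prints jpeg
        System.out.println(extensionFor("image/png; charset=UTF-8")); // prints png
        System.out.println(extensionFor("image/svg+xml"));            // prints svg
        System.out.println(extensionFor(null));                       // prints bin
    }
}
```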

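5-8: Start the crawl loop

    // Opens a database connection, crawls once, then repeats every 30 seconds
    public static void start() {
        while (true) {
            DbUtil dbUtil = new DbUtil();
            try {
                // Open the connection used by this crawl
                con = dbUtil.getCon();
                parseHomePage();
            } catch (Exception e) {
                // Pass the exception along so that the cause shows up in the log
                logger.error("Failed to connect to the database or to crawl the home page", e);
            } finally {
                try {
                    if (con != null) {
                        con.close();
                    }
                } catch (SQLException e) {
                    logger.error("Failed to close the connection: SQLException", e);
                }
            }
            try {
                // Wait half a minute before the next crawl
                Thread.sleep(1000 * 60 / 2);
            } catch (InterruptedException e) {
                logger.info("Main thread sleep interrupted: InterruptedException", e);
            }
        }
    }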
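The while(true) plus Thread.sleep loop works, but it cannot be stopped cleanly. One alternative is a ScheduledExecutorService, sketched below under the assumption that a crawl can be wrapped in a Runnable. The names CrawlSchedulerSketch, startPeriodic, and runsAtLeast are hypothetical, and the short delay in main is only there to make the demo finish quickly; the crawler itself would use 30 seconds.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

final class CrawlSchedulerSketch {
    // Runs the task immediately, then again after each run finishes plus the given delay
    static ScheduledExecutorService startPeriodic(Runnable crawlOnce, long delay, TimeUnit unit) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        Runnable safe = () -> {
            try {
                crawlOnce.run();
            } catch (RuntimeException e) {
                // An uncaught exception would cancel all later runs, so it is caught here
                System.err.println("crawl failed: " + e);
            }
        };
        scheduler.scheduleWithFixedDelay(safe, 0, delay, unit);
        return scheduler;
    }

    // Demo helper: true if the task ran at least `runs` times before the timeout
    static boolean runsAtLeast(int runs, long delayMillis, long timeoutMillis) {
        CountDownLatch latch = new CountDownLatch(runs);
        ScheduledExecutorService scheduler =
                startPeriodic(latch::countDown, delayMillis, TimeUnit.MILLISECONDS);
        try {
            return latch.await(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            // Stopping the crawler is a single call
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(runsAtLeast(3, 10, 5000));
    }
}
```

scheduleWithFixedDelay measures the pause from the end of one run to the start of the next, which matches the behavior of sleeping after each crawl.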

5-9: Run:

    public static void main(String[] args) {
        start();
    }

Note: the main code ends here.

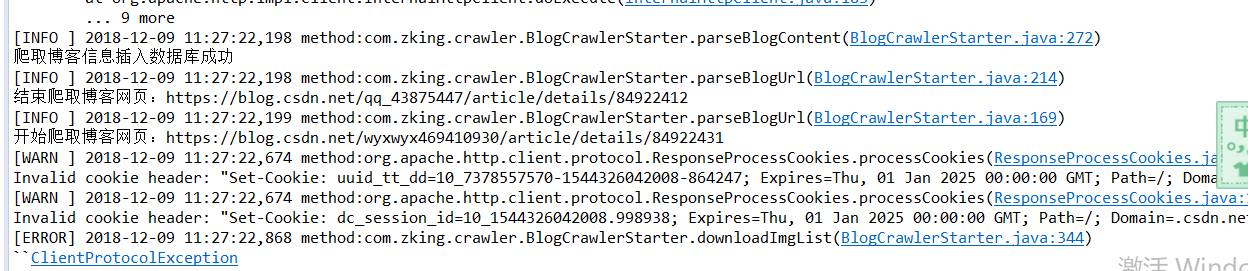

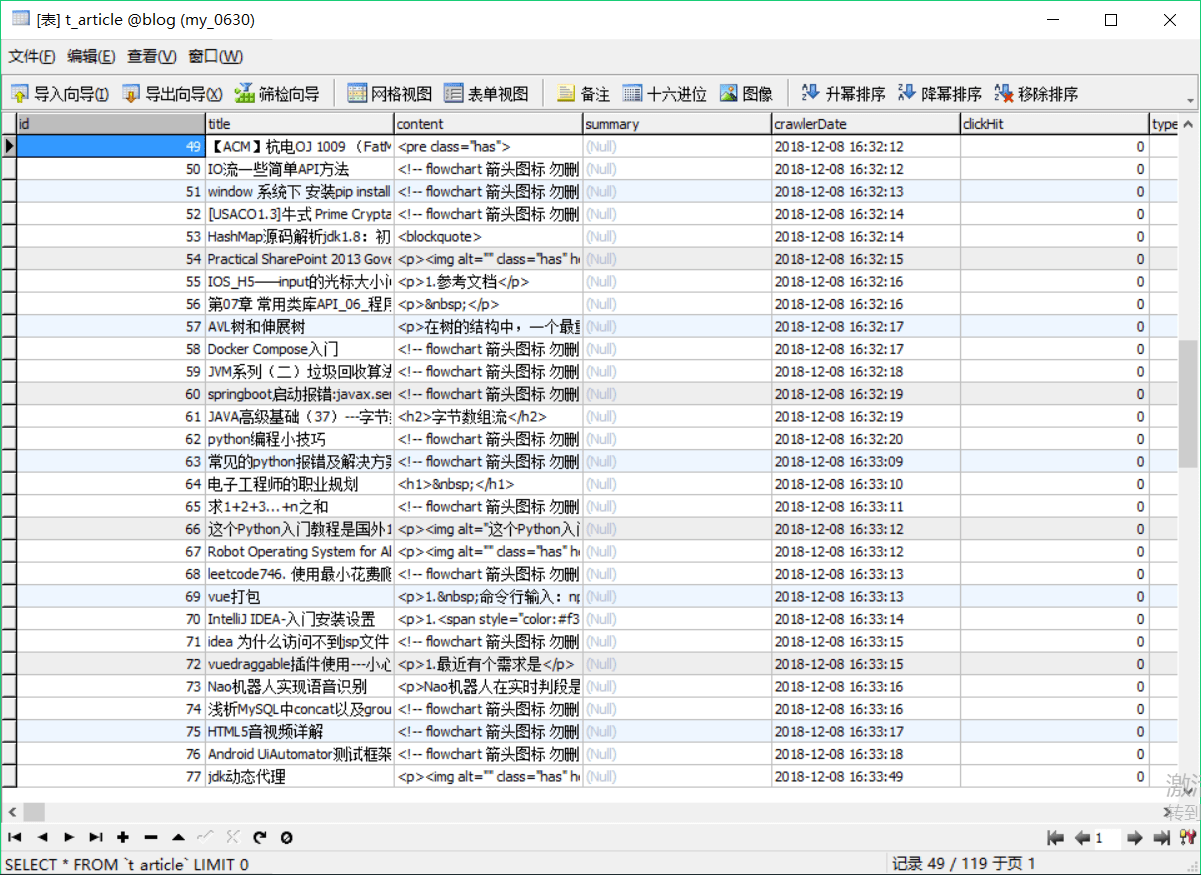

6. Results:

Rows inserted into the database:

Images saved locally:

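7. The second-level cache configuration file, ehcache.xml; copy it as-is if you need it:

    <?xml version="1.0" encoding="UTF-8"?>

    <ehcache>
        <!--
            Disk store: moves cached objects that are not currently in use to disk,
            similar to virtual memory on Windows.
            path: the directory on disk where the objects are stored
        -->
        <diskStore path="C:\blogCrawler\blogehcache" />

        <!--
            defaultCache: the default settings, applied to every cache that has no settings of its own
            maxElementsInMemory: the maximum number of elements kept in memory
            eternal: whether elements never expire
            overflowToDisk: when the number of elements in memory reaches maxElementsInMemory,
                            Ehcache writes elements to disk
        -->
        <defaultCache maxElementsInMemory="1" eternal="true" overflowToDisk="true"/>

        <cache name="cnblog" maxElementsInMemory="1" diskPersistent="true" eternal="true" overflowToDisk="true"/>

    </ehcache>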
