I. Scraping a simple web page

1. Open cmd

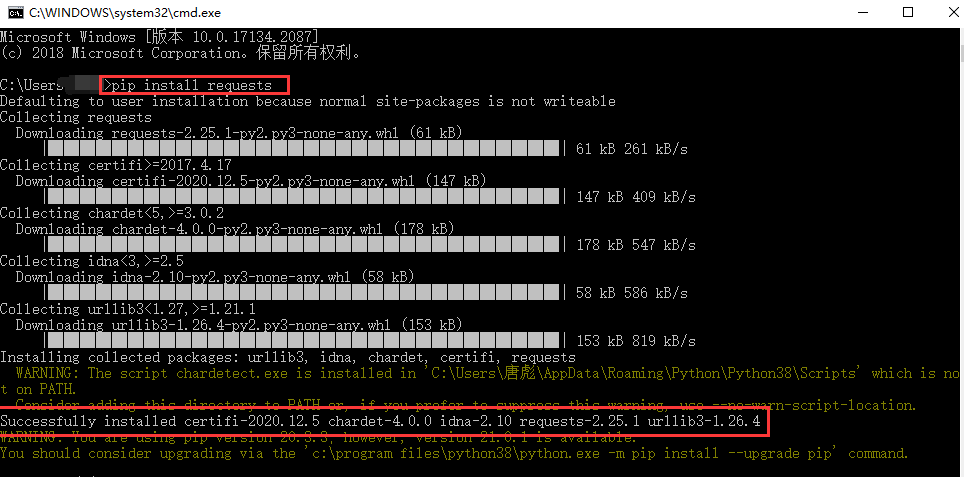

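2. Install the requests module by running pip install requests

3. Create a new .py file. We use the site https://www.bqkan.com as the example; the code below fetches one page of the novel 斗罗大陆: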

    import requests  # import the requests package

    url = 'https://www.bqkan.com/3_3026/1343656.html'
    strHtml = requests.get(url)  # fetch the page with a GET request
    html = strHtml.text
    print(html)
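If the printed HTML shows garbled characters where the Chinese text should be, the likely cause is that the bytes were decoded with the wrong character set. The sketch below uses only the standard library and assumes, purely for illustration, that the page is encoded as GBK; check the page's own charset declaration rather than trusting this guess. With requests, the usual remedy is to set `strHtml.encoding = strHtml.apparent_encoding` before reading `strHtml.text`.

```python
# Simulate the raw bytes a server might send for a GBK-encoded page.
# GBK is an assumption for this demo, not a confirmed property of the site.
raw = "斗罗大陆".encode("gbk")

wrong = raw.decode("iso-8859-1")  # wrong charset: decoding succeeds but the text is garbled
right = raw.decode("gbk")         # matching charset: the original text comes back

print(repr(wrong))
print(right)
```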

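II. Scraping one chapter of the novel

1. Open cmd and install Beautiful Soup by running pip install beautifulsoup4

2. Clean the scraped data so that only the chapter text remains. The code is as follows: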

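    # Scrape a web page and extract the chapter text
    import requests  # import the requests package
    from bs4 import BeautifulSoup

    url = 'https://www.bqkan.com/3_3026/1343656.html'
    strHtml = requests.get(url)  # fetch the page with a GET request
    html = strHtml.text
    bf = BeautifulSoup(html, "html.parser")
    texts = bf.find_all('div', class_='showtxt')  # the chapter text sits in div.showtxt
    # Each run of eight non-breaking spaces becomes a blank line between paragraphs
    print(texts[0].text.replace('\xa0' * 8, '\n\n'))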
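The cleaning step can be tried without touching the network. The following is a minimal sketch: the helper name `clean_chapter_text` and the sample string are made up for illustration, and the sample only mimics the shape of the text in the showtxt div.

```python
def clean_chapter_text(raw_text: str) -> str:
    """Turn each run of eight non-breaking spaces into a paragraph break."""
    return raw_text.replace('\xa0' * 8, '\n\n')


# Made-up sample that mimics the text of a showtxt div (not real site content).
sample = '\xa0' * 8 + 'First paragraph.' + '\xa0' * 8 + 'Second paragraph.'
cleaned = clean_chapter_text(sample)
print(cleaned)
```

Because the text starts with a run of non-breaking spaces, the result begins with a blank line; calling `.strip()` on it removes that if it is unwanted.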

III. Scraping the whole novel

We use 斗罗大陆 as the example, with URL=https://www.bqkan.com/3_3026/:

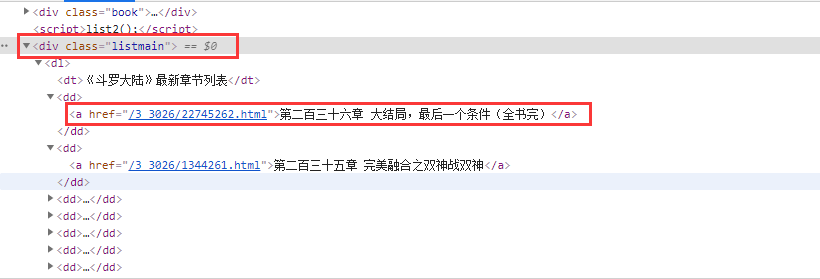

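1. Open the element inspector (F12). The chapter links all sit inside a div tag whose class attribute is listmain, and each chapter's full URL is formed by joining the link's href with https://www.bqkan.com/3_3026.

2. We therefore modify the earlier code as follows to fetch the listmain block, which holds the href address of every chapter: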

    import requests  # import the requests package
    from bs4 import BeautifulSoup

    url = 'https://www.bqkan.com/3_3026'
    strHtml = requests.get(url)  # fetch the page with a GET request
    html = strHtml.text
    bf = BeautifulSoup(html, "html.parser")
    div = bf.find_all('div', class_='listmain')  # the chapter list container
    print(div[0])
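To see what this selection matches without requesting the site, here is a dependency-free sketch that swaps Beautiful Soup for the standard library's `html.parser`. The class name `ListmainLinks` and the HTML snippet are made up for illustration; the real page's markup may differ.

```python
from html.parser import HTMLParser


class ListmainLinks(HTMLParser):
    """Collect (title, href) pairs for <a> tags inside <div class="listmain">."""

    def __init__(self):
        super().__init__()
        self.depth = 0    # div nesting depth inside the listmain div (0 = outside)
        self.href = None  # href of the <a> currently being read, if any
        self.text = []    # text fragments of the current <a>
        self.links = []   # collected (title, href) pairs

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self.depth > 0:
                self.depth += 1
            elif "listmain" in (attrs.get("class") or "").split():
                self.depth = 1
        elif tag == "a" and self.depth > 0:
            self.href = attrs.get("href")
            self.text = []

    def handle_endtag(self, tag):
        if tag == "div" and self.depth > 0:
            self.depth -= 1
        elif tag == "a" and self.href is not None:
            self.links.append(("".join(self.text).strip(), self.href))
            self.href = None

    def handle_data(self, data):
        if self.href is not None:
            self.text.append(data)


# Hypothetical markup: one link outside the list, two inside it.
sample = """
<div class="header"><a href="/">Home</a></div>
<div class="listmain"><dl>
<dd><a href="/3_3026/1.html">Chapter 1</a></dd>
<dd><a href="/3_3026/2.html">Chapter 2</a></dd>
</dl></div>
"""

parser = ListmainLinks()
parser.feed(sample)
links = parser.links
print(links)
```

Only the links inside the listmain div are collected; the navigation link in the header is ignored, which is the same filtering that `find_all('div', class_='listmain')` provides.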

3. Use Beautiful Soup to clean the data and obtain the full link of every chapter. The code is as follows:

    import requests  # import the requests package
    from bs4 import BeautifulSoup

    source = "https://www.bqkan.com/"
    url = 'https://www.bqkan.com/3_3026'
    strHtml = requests.get(url)  # fetch the page with a GET request
    html = strHtml.text
    bf = BeautifulSoup(html, "html.parser")
    div = bf.find_all('div', class_='listmain')
    a = div[0].find_all("a")  # search the listmain div directly; no need to re-parse it
    for item in a:
        # chapter title, then the full chapter URL
        print(item.string, source + item.get("href"))
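Plain string concatenation only produces a clean URL when the href values have exactly the expected shape. As a hedged alternative, `urllib.parse.urljoin` from the standard library resolves an href against the page URL in the way a browser would. The two href shapes below are hypothetical; inspect the real page to see which one it uses.

```python
from urllib.parse import urljoin

# The trailing slash matters: it marks 3_3026 as a directory for relative hrefs.
index_url = 'https://www.bqkan.com/3_3026/'

# Two hypothetical href shapes; the real page may use either one.
root_relative = '/3_3026/1343656.html'  # starts at the site root
page_relative = '1343656.html'          # relative to the chapter list page

full_a = urljoin(index_url, root_relative)
full_b = urljoin(index_url, page_relative)
print(full_a)
print(full_b)
```

Both calls resolve to the same chapter address, so the loop would not need to know in advance which form the site uses.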

4. With the full link of every chapter in hand, we can download the whole novel. The code is as follows:

    from bs4 import BeautifulSoup
    import requests

    class downloader(object):

        # Initialization
        def __init__(self):
            self.server = 'https://www.bqkan.com'  # site root, same domain as self.target
            self.target = 'https://www.bqkan.com/3_3026'
            self.names = []  # chapter titles
            self.urls = []   # chapter links
            self.nums = 0    # number of chapters

        # Collect the full URL of every chapter
        def get_download_url(self):
            req = requests.get(url=self.target)
            html = req.text
            div_bf = BeautifulSoup(html, "html.parser")
            div = div_bf.find_all('div', class_='listmain')
            a = div[0].find_all("a")  # every chapter link inside the listmain div
            self.nums = len(a)  # count the chapters
            for each in a:
                print(each.string, self.server + each.get('href'))
                self.names.append(each.string)
                self.urls.append(self.server + each.get('href'))

        # Fetch the text of the chapter at the given URL
        def get_contents(self, target):
            req = requests.get(url=target)
            html = req.text
            bf = BeautifulSoup(html, "html.parser")
            texts = bf.find_all('div', class_='showtxt')
            texts = texts[0].text.replace('\xa0' * 8, '\n\n')
            return texts

        # Write one chapter to disk
        def writer(self, name, path, text):
            with open(path, 'w', encoding='utf-8') as f:
                f.write(name + '\n')
                f.write(text)
                f.write('\n\n')

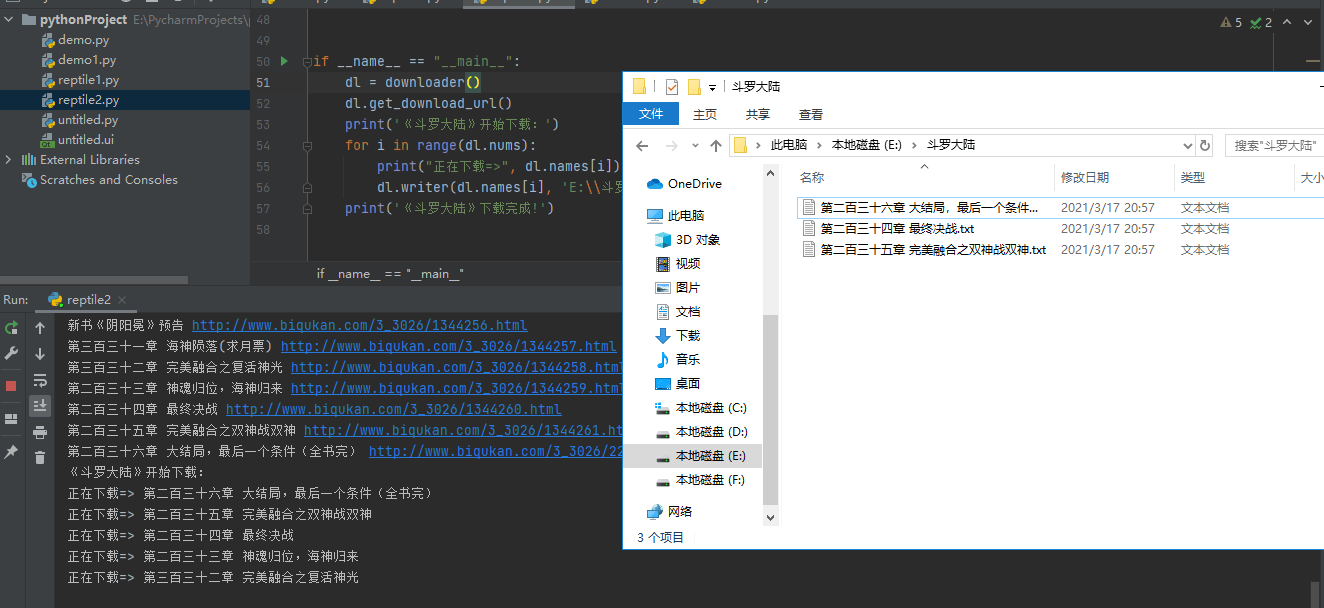

    if __name__ == "__main__":
        dl = downloader()
        dl.get_download_url()
        print('Starting download of 《斗罗大陆》:')
        for i in range(dl.nums):
            print("Downloading =>", dl.names[i])
            # The folder E:\斗罗大陆 must already exist; open() does not create it
            dl.writer(dl.names[i], 'E:\\斗罗大陆\\' + dl.names[i] + '.txt', dl.get_contents(dl.urls[i]))
        print('Download of 《斗罗大陆》 complete!')
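Writing one file per chapter can fail in two ordinary ways: the target folder may not exist, and a chapter title may contain characters that Windows forbids in file names. The sketch below shows one way to guard against both. The helper names `safe_filename` and `save_chapter` are made up for this example, and the demo writes to a temporary folder rather than to E:\斗罗大陆.

```python
import re
import tempfile
from pathlib import Path


def safe_filename(name: str) -> str:
    """Replace characters that Windows forbids in file names with underscores."""
    return re.sub(r'[\\/:*?"<>|]', '_', name).strip()


def save_chapter(folder: Path, name: str, text: str) -> Path:
    """Create the folder if needed and write one chapter as a UTF-8 text file."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / (safe_filename(name) + '.txt')
    with open(path, 'w', encoding='utf-8') as f:
        f.write(name + '\n')
        f.write(text)
        f.write('\n\n')
    return path


# Demo with a made-up chapter title, written to a throwaway folder.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_chapter(Path(tmp) / 'novel', 'Chapter 1: Who? What?', 'Sample text.')
    saved_name = saved.name
    saved_body = saved.read_text(encoding='utf-8')

print(saved_name)
```

In the download loop, a call such as `save_chapter(Path('E:/斗罗大陆'), dl.names[i], dl.get_contents(dl.urls[i]))` could stand in for `dl.writer(...)`.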

5. The result of running the script is shown in the figure.
