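Contents

1. Introduction

2. Key points

3. Writing the Scrapy project

3.1 Creating the structurae project

3.2 Analyzing the site with scrapy shell

3.3 Writing items.py

3.4 Writing structure.py

3.5 Writing pipelines.py

3.6 Writing settings.py

4. Results

5. Closing remarks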

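1. Introduction

For an engineer, coming across Structurae.net is a real stroke of luck, and I was fortunate enough to find it at 25. As the site's own introduction puts it, Structurae wants to be the starting point of your search, but usually not the end of it. We will not always find everything we are looking for there, but it tries to point us to more places and sources of information where we might. The site's introduction is reproduced below for anyone who would like to read it.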

Structurae is a database for works of structural and civil engineering, but also contains many other works of importance or interest of the fields of architecture and public works. This site is mostly concerned with the structural aspects of the works documented here and the technical aspects of their construction and design. At the same time we do take into account the social, historic and architectural context.

The structures are chosen based on the interest they pose for the profession of the structural or civil engineer, but since that profession did not exist before the 18th century, many historic structures included here are firstly known for their architectural merits.

Thus this site offers you the most interesting constructions throughout history and of our time, but also more typical structures from around the world. But the interest is not only on the results of the construction process, but also on the actors involved — engineers, builders, architects, companies, …

Structurae wants to be a resource that serves the beginning of your search, but is most likely not going to be the end of it. You will not always find everything you are looking for, but we attempt to point you to more and more places and sources of information where you may be able to find what you are looking for.

Structurae was created in 1998 by Nicolas Janberg, structural and bridge engineer by training and profession, out of a "gallery of structures" he built for the course "Structures and the Urban Environment" (CIV 262) taught at Princeton University and for which he was a teaching assistant at the time. After continuing work on the site initially as a hobby while working as a bridge engineer, he made a job out of it in 2002.

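2. Key points

- Download images with the Images Pipeline

- Save the data to an .xlsx file with the openpyxl library

3. Writing the Scrapy project

3.1 Creating the structurae project

An earlier post described in detail how to create a Scrapy project. The naming rule is to base the project name on the site's domain, with or without a suffix. Here: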

scrapy startproject structurae

3.2 Analyzing the site with scrapy shell

3.3 Writing items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class StructureItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        image_urls = scrapy.Field()
        images_paths = scrapy.Field()
        dir_name = scrapy.Field()
        data_number = scrapy.Field()
        data_name = scrapy.Field()
        data_year = scrapy.Field()
        data_location = scrapy.Field()
        data_status = scrapy.Field()

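3.4 Writing structure.py

I ran into quite a few problems while writing the spider. Working through them gives a deeper understanding of Scrapy's architecture, its components, and how those components work together. There are many ways to debug a spider, such as the parse command, the scrapy shell, opening the response in a browser, and logging. They all come down to the same thing: collecting information about the spider's run, locating the bug from it, and working out the likely cause.

One problem that troubled me for quite a while in this project was that the generated Item objects were wrong. The version of structure.py that produced the faulty results is shown below: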

    # -*- coding: utf-8 -*-
    import scrapy
    from structurae.items import StructureItem


    class StructureSubcategoriesSpider(scrapy.Spider):
        name = 'structure_subcategories'
        # Some sites answer with HTTP 403 when crawled, so start_requests is
        # used to send a browser-like User-Agent
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36',
        }

        def start_requests(self):
            url = 'https://structurae.net/structures/bridges-and-viaducts/composite-material-bridges/list'
            yield scrapy.Request(url=url, headers=self.headers, callback=self.parse)

        # When Requests are initialised from start_urls, parse() is the
        # default callback and does not need to be specified.

        # Parse the response and collect the link to each structure in the list
        def parse(self, response):
            selectors = response.xpath('//table/tr')
            # The item is created once, before the loop (this is the faulty part)
            item = StructureItem()
            for selector in selectors:
                structure_url_suffix = selector.xpath('./td[2]/a/@href').extract_first()
                if not structure_url_suffix:
                    # Skip rows without a link, e.g. a header row
                    continue
                structure_url = 'https://structurae.net' + structure_url_suffix
                item['data_number'] = selector.xpath('td[1]/text()').extract_first()
                item['data_year'] = selector.xpath('td[3]/text()').extract_first()
                item['data_location'] = selector.xpath('td[4]/text()').extract_first()
                item['data_status'] = selector.xpath('td[5]/text()').extract_first()
                yield scrapy.Request(url=structure_url, headers=self.headers,
                                     meta={'item': item}, callback=self.parse2)

            # Pagination: extract_first() returns None when there is no next page
            next_url = response.xpath('//div[@class="nextPageNav"]/a[1]/@href').extract_first()
            if next_url:
                yield scrapy.Request(url=response.urljoin(next_url),
                                     headers=self.headers, callback=self.parse)

        # Scrape the page of a single structure
        def parse2(self, response):
            item = response.meta['item']
            item['image_urls'] = response.xpath('//figure/a/@href').extract()
            item['data_name'] = response.xpath('//div[@id="richscope"]/h1/text()').extract_first()
            yield item

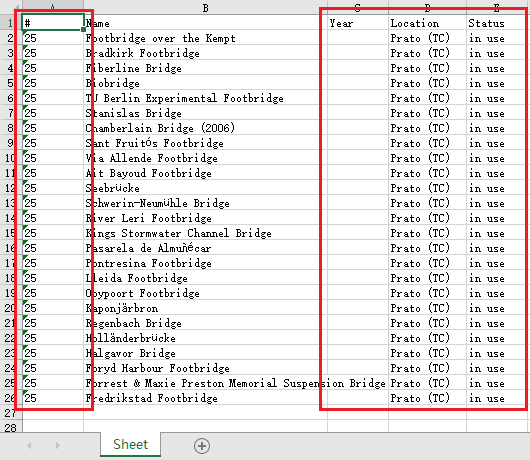

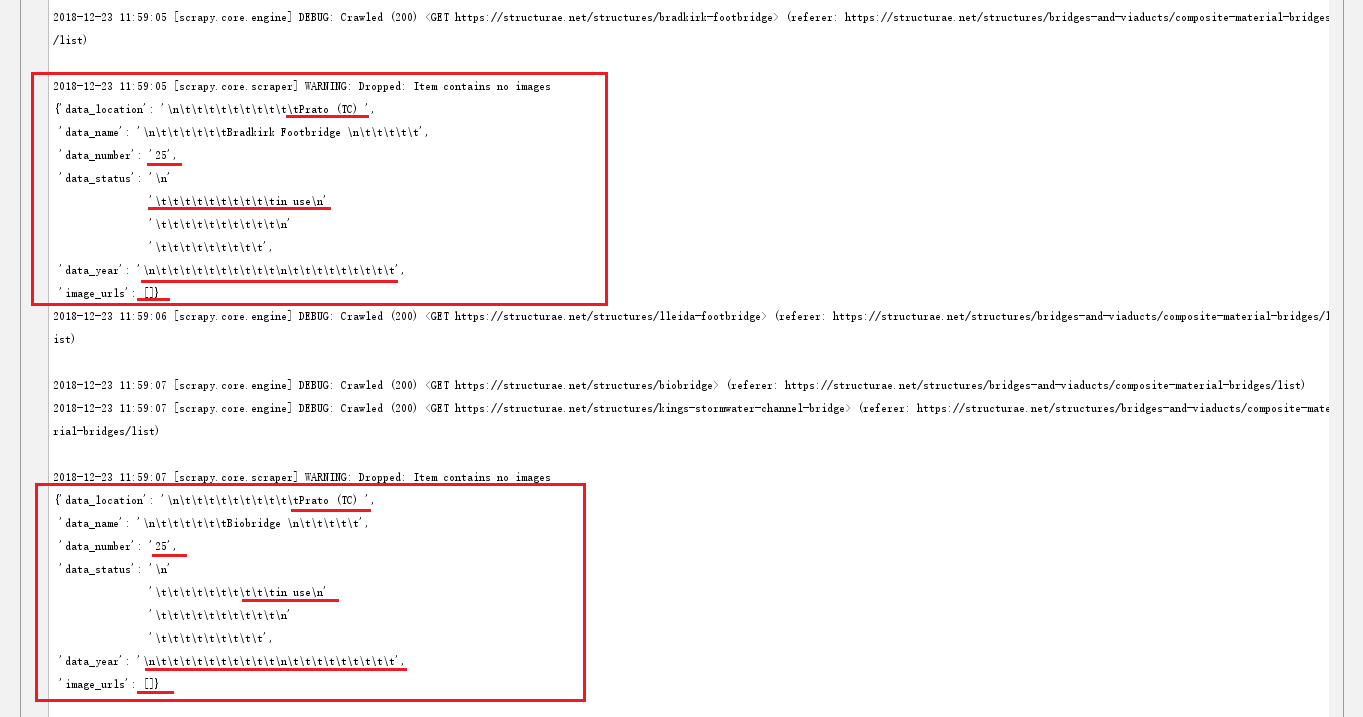

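In the resulting Composite material bridges.xlsx, the # (number), Location and Status columns hold the same value in every row, and the Year column is empty. That is clearly wrong, so we look at the log: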

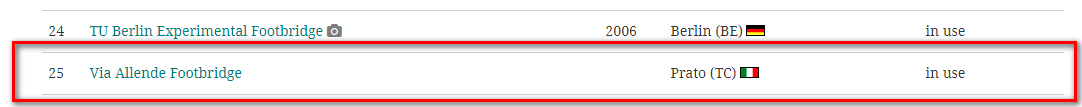

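Every generated item has identical data_location, data_number, data_status and data_year fields. Checking against the website shows that these values match the last structure in the list exactly. That cannot be a coincidence; the bug most likely lies in the code that creates the items.

The statement item = StructureItem(), which instantiates the StructureItem class, sits before the for loop, so the program creates only one item object for its entire run; call it "itemA". Each pass of the loop assigns new values to the same keys of itemA, overwriting the previous ones. Every request carries a reference to this same object, so by the time parse2 runs the item holds the values written last, which are those of "Via Allende Footbridge". The fix is to move item = StructureItem() inside the for loop. The two variants differ as follows:

With item = StructureItem() before the for loop: only one object, itemA, is created, and each of its keys is assigned repeatedly, so later values overwrite earlier ones.

With item = StructureItem() inside the for loop: a new item is created for every row, and each key of each item is assigned exactly once.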
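The difference between the two placements can be reproduced without Scrapy. The sketch below is a simplified stand-in, not the spider itself: plain dicts play the role of StructureItem, the rows list is made-up sample data, and both function names are hypothetical. Each function collects the items that would travel with the requests, which are only looked at after the loop has finished.

```python
# Made-up sample rows standing in for the table rows on the list page.
rows = [
    {"number": "1", "location": "City A"},
    {"number": "2", "location": "City B"},
    {"number": "3", "location": "City C"},
]


def items_created_before_loop(rows):
    """Create one item before the loop and reuse it for every row."""
    item = {}
    pending = []
    for row in rows:
        item["data_number"] = row["number"]
        item["data_location"] = row["location"]
        pending.append(item)  # every entry refers to the same dict
    return pending


def items_created_inside_loop(rows):
    """Create a fresh item for every row."""
    pending = []
    for row in rows:
        item = {}
        item["data_number"] = row["number"]
        item["data_location"] = row["location"]
        pending.append(item)  # each entry is its own dict
    return pending


shared = items_created_before_loop(rows)
separate = items_created_inside_loop(rows)

print([i["data_number"] for i in shared])    # ['3', '3', '3']
print([i["data_number"] for i in separate])  # ['1', '2', '3']
```

In the first variant all three entries are the same object, so they all show the values of the last row, which mirrors the repeated columns seen in the spreadsheet.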

One more line in the spider is worth a closer look:

yield scrapy.Request(url=structure_url, meta={'item': item}, callback=self.parse2)

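A Request object passes information between parse methods through its meta parameter; see the Requests and Responses page of the Scrapy documentation for details. meta is a dict. The expression meta={'item': item} is a simple one: it creates that dict and stores item (itself a dict-like object) under the 'item' key. With meta as the carrier, the item object can be handed from one parse method to the next.

    def parse2(self, response):
        item = response.meta['item']
        item['image_urls'] = response.xpath('//figure/a/@href').extract()
        item['data_name'] = response.xpath('//div[@id="richscope"]/h1/text()').extract_first()
        yield item

As shown, parse2 retrieves the item from meta, fills in the remaining fields, and finally yields the finished Item.

Passing information through meta on a Request comes up again later, when writing pipelines.py.

The final, debugged structure.py is as follows: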

    # -*- coding: utf-8 -*-
    import scrapy
    from structurae.items import StructureItem


    class StructureSubcategoriesSpider(scrapy.Spider):
        name = 'structure_subcategories'
        # Some sites answer with HTTP 403 when crawled, so start_requests is
        # used to send a browser-like User-Agent
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36',
        }

        def start_requests(self):
            url = 'https://structurae.net/structures/bridges-and-viaducts/composite-material-bridges/list'
            yield scrapy.Request(url=url, headers=self.headers, callback=self.parse)

        # When Requests are initialised from start_urls, parse() is the
        # default callback and does not need to be specified.

        # Parse the response and collect the link to each structure in the list
        def parse(self, response):
            selectors = response.xpath('//table/tr')
            for selector in selectors:
                # A new item for every row
                item = StructureItem()
                structure_url_suffix = selector.xpath('./td[2]/a/@href').extract_first()
                if not structure_url_suffix:
                    # Skip rows without a link, e.g. a header row
                    continue
                structure_url = 'https://structurae.net' + structure_url_suffix
                item['data_number'] = selector.xpath('td[1]/text()').extract_first()
                item['data_year'] = selector.xpath('td[3]/text()').extract_first()
                item['data_location'] = selector.xpath('td[4]/text()').extract_first()
                item['data_status'] = selector.xpath('td[5]/text()').extract_first()
                yield scrapy.Request(url=structure_url, headers=self.headers,
                                     meta={'item': item}, callback=self.parse2)

            # Pagination: extract_first() returns None when there is no next page
            next_url = response.xpath('//div[@class="nextPageNav"]/a[1]/@href').extract_first()
            if next_url:
                yield scrapy.Request(url=response.urljoin(next_url),
                                     headers=self.headers, callback=self.parse)

        # Scrape the page of a single structure
        def parse2(self, response):
            item = response.meta['item']
            item['image_urls'] = response.xpath('//figure/a/@href').extract()
            item['data_name'] = response.xpath('//div[@id="richscope"]/h1/text()').extract_first()
            yield item
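A note on the pagination step: a selector's extract() always returns a list, which is simply empty when nothing matches, while extract_first() returns None in that case. A check of the form "is not None" therefore only works with the latter. The sketch below mimics the list case with plain Python so that it runs without Scrapy; the function name next_page_url and the sample href are assumptions made for illustration, and urllib.parse.urljoin stands in for the URL joining that a response object would do.

```python
from urllib.parse import urljoin

BASE_URL = "https://structurae.net"


def next_page_url(hrefs):
    """Return the absolute URL of the next page, or None on the last page.

    `hrefs` mimics the list returned by extract(): empty when nothing matched.
    """
    if not hrefs:  # an empty list is falsy, but it is not None
        return None
    return urljoin(BASE_URL, hrefs[0])


# An empty result still passes a None check, so indexing it would fail.
print([] is not None)  # True

# Hypothetical href, for illustration only.
print(next_page_url(["/structures/list?page=2"]))
print(next_page_url([]))  # None
```

Testing the truthiness of the result covers both the empty list and None, so the same guard works whichever extraction method is used.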

3.5 Writing pipelines.py

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import scrapy
    from scrapy.pipelines.images import ImagesPipeline
    from scrapy.exceptions import DropItem
    from openpyxl import Workbook
    from structurae import settings
    import os
    import re


    class StructureFilesPipeline(object):
        # One workbook for the whole crawl, with a header row
        wb = Workbook()
        ws = wb.active
        ws.append(['#', 'Name', 'Year', 'Location', 'Status'])

        def process_item(self, item, spider):
            fields = ['data_number', 'data_name', 'data_year', 'data_location', 'data_status']
            # extract_first() yields None for an empty cell, so fall back to ''
            line = [(item.get(field) or '').strip() for field in fields]
            self.ws.append(line)
            self.wb.save('E:/structure/Composite material bridges/Composite material bridges.xlsx')
            return item


    class StructureImagesPipeline(ImagesPipeline):

        # def process_item(self, item, spider):
        #     dir_path = '%s/%s' % (settings.IMAGES_STORE, item['data_name'])
        #     if not os.path.exists(dir_path):
        #         os.makedirs(dir_path)
        #     return item

        def get_media_requests(self, item, info):
            for image_url in item['image_urls']:
                # meta is the key (and trickiest) part: it carries the item to file_path
                yield scrapy.Request(url=image_url, meta={'item': item})

        def item_completed(self, results, item, info):
            image_paths = [x['path'] for ok, x in results if ok]
            if not image_paths:
                raise DropItem("Item contains no images")
            # item['image_paths'] = image_paths
            return item

        def file_path(self, request, response=None, info=None):
            item = request.meta['item']
            dir_name = item['data_name']
            dir_name = dir_name.strip()
            # Remove characters that are not allowed in directory names
            dir_strip = re.sub(r'[?\\*|"<>:/]', '', str(dir_name))
            image_name = request.url.split('/')[-1]
            filename = u'{0}/{1}'.format(dir_strip, image_name)
            return filename
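The path logic in file_path can be tried on its own. The following is a minimal sketch that uses only the standard library: the regular expression is the one from the pipeline, while the helper name build_image_path and the sample inputs are made up for illustration.

```python
import re

# Same character class as in the pipeline: characters not allowed in
# Windows file or directory names.
FORBIDDEN = re.compile(r'[?\\*|"<>:/]')


def build_image_path(structure_name, image_url):
    """Return '<cleaned structure name>/<image file name>'."""
    dir_name = FORBIDDEN.sub("", structure_name.strip())
    image_name = image_url.split("/")[-1]
    return "{0}/{1}".format(dir_name, image_name)


# Hypothetical inputs, for illustration only.
print(build_image_path('  Bridge: "A/B"?  ', "https://example.com/photos/img-001.jpg"))
# Bridge AB/img-001.jpg
```

The returned path is relative, so the images pipeline places each structure's pictures in a sub-folder of IMAGES_STORE named after the structure.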

3.6 Writing settings.py

    # Configure item pipelines
    # See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        # Enable the image pipeline
        'structurae.pipelines.StructureFilesPipeline': 1,
        'structurae.pipelines.StructureImagesPipeline': 2,
    }
    # Image storage path
    IMAGES_STORE = "E:/structure/Composite material bridges"
    MEDIA_ALLOW_REDIRECTS = True
    # Auto-generated setting: whether to obey robots.txt rules; disabled here
    ROBOTSTXT_OBEY = False
    # Cookies are disabled here
    COOKIES_ENABLED = False
    # Download delay; 250 ms is used here
    DOWNLOAD_DELAY = 0.25

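4. Results

The code for this project has been uploaded to my GitHub.

From the command line, change into the project's root directory and run the crawl command (scrapy crawl structure_subcategories, using the spider name defined in structure.py). The beautiful images come pouring in. Wonderful!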

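5. Closing remarks

- When using images crawled from structurae.net, be sure to respect copyright;

- Do not use this program for any commercial purpose; it is for learning and discussion only;

- If you have questions, leave a comment. If you spot mistakes, corrections are welcome. Thank you!

This blog is a record of my experience learning Python. Thanks for following along!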
