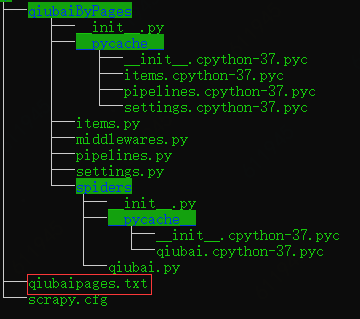

I. Single-page crawling

    # -*- coding: utf-8 -*-
    import scrapy
    from qiubaiByPages.items import QiubaibypagesItem


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        # allowed_domains takes bare domain names, not URL paths
        allowed_domains = ['www.qiushibaike.com']
        start_urls = ['http://www.qiushibaike.com/text/']

        def parse(self, response):
            # One div per post inside the main content column
            div_list = response.xpath('//div[@id="content-left"]/div')
            for div in div_list:
                author = div.xpath("./div/a[2]/h2/text()").extract_first()
                content = div.xpath("./a/div/span/text()").extract_first()

                # Create an item object and store the parsed data in it
                item = QiubaibypagesItem()
                item["author"] = author
                item["content"] = content
                yield item
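The relative XPath expressions in `parse` (those starting with `./`) are evaluated against each post `div` in turn, not against the whole page. The sketch below illustrates the idea with the standard library's `xml.etree.ElementTree`, used here only as a stand-in: it supports a subset of XPath and has no `text()` step, so `findtext` plays the role of `.../text()` plus `extract_first()`. The sample markup and the names `SAMPLE` and `rows` are made up for illustration and only mimic the structure the spider expects.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup shaped like the structure the spider queries
SAMPLE = """
<html><body>
<div id="content-left">
  <div>
    <div><a href="#">avatar</a><a href="#"><h2>alice</h2></a></div>
    <a href="#"><div><span>first joke</span></div></a>
  </div>
  <div>
    <div><a href="#">avatar</a><a href="#"><h2>bob</h2></a></div>
    <a href="#"><div><span>second joke</span></div></a>
  </div>
</div>
</body></html>
""".strip()

root = ET.fromstring(SAMPLE)

rows = []
# Select every post div, then query each one with paths relative to it
for post in root.findall('.//div[@id="content-left"]/div'):
    author = post.findtext("./div/a[2]/h2")   # second <a> inside the author div
    content = post.findtext("./a/div/span")   # span inside the post's own <a>
    rows.append((author, content))

print(rows)  # [('alice', 'first joke'), ('bob', 'second joke')]
```

`findtext` returns `None` when nothing matches, just as `extract_first()` does, so downstream code should be prepared for missing values.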

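    class QiubaibypagesPipeline(object):
        fp = None

        def open_spider(self, spider):
            # Called once when the spider starts
            print("Spider started")
            self.fp = open("./qiubaipages.txt", "w", encoding="utf-8")

        def process_item(self, item, spider):
            # extract_first() returns None when nothing matches; fall back to ""
            author = item["author"] or ""
            content = item["content"] or ""
            # Write one record per line
            self.fp.write(author + ":" + content + "\n")
            return item

        def close_spider(self, spider):
            # Called once when the spider closes
            self.fp.close()
            print("Spider finished")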
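A pipeline class only runs if it is enabled in the project's `settings.py`. A minimal sketch of that entry follows, assuming the default project layout in which the pipeline lives in `qiubaiByPages/pipelines.py`. That module path is inferred from the `qiubaiByPages.items` import above and is not stated in the original.

```python
# settings.py (fragment)
# Keys are dotted paths to pipeline classes; values are integers in the
# 0-1000 range that set the order (lower numbers run first).
# The module path below is an assumption based on the default Scrapy layout.
ITEM_PIPELINES = {
    "qiubaiByPages.pipelines.QiubaibypagesPipeline": 300,
}
```

With a single pipeline the exact number does not matter; it only becomes relevant once several pipelines process the same items.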

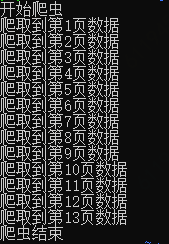

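II. Multi-page crawling

Sending requests manually: after parsing a page, the spider yields a new `scrapy.Request` for the next page and passes `parse` as the callback.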

    # -*- coding: utf-8 -*-
    import scrapy
    from qiubaiByPages.items import QiubaibypagesItem


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        # allowed_domains = ['www.qiushibaike.com']
        start_urls = ['https://www.qiushibaike.com/text/']
        # Generic URL template for the paginated listing
        url = "https://www.qiushibaike.com/text/page/%d/"
        pageNum = 1

        def parse(self, response):
            div_list = response.xpath('//div[@id="content-left"]/div')
            for div in div_list:
                author = div.xpath("./div/a[2]/h2/text()").extract_first()
                content = div.xpath("./a/div/span/text()").extract_first()

                # Create an item object and store the parsed data in it
                item = QiubaibypagesItem()
                item["author"] = author
                item["content"] = content
                yield item

            print("Crawled page %d" % self.pageNum)
            # Send the next request manually. 13 is the number of the last page;
            # the counter is incremented before the URL is built, so the check
            # must be strict or page 14 would be requested.
            if self.pageNum < 13:
                self.pageNum += 1
                new_url = self.url % self.pageNum
                # callback: the method that parses the fetched page
                yield scrapy.Request(url=new_url, callback=self.parse)
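The page counter and URL template can be checked in isolation, without running a crawl. The following is a small sketch that mirrors the counter logic of `parse` in plain Python. The helper `follow_up_urls` and the constant names are hypothetical and exist only for this illustration; the last page number 13 is taken from the comment in the spider.

```python
URL_TEMPLATE = "https://www.qiushibaike.com/text/page/%d/"
LAST_PAGE = 13  # last page number, as stated in the spider's comment


def follow_up_urls(template, last_page):
    """Yield the URLs requested after the start URL (pages 2..last_page)."""
    page = 1  # page 1 is already covered by start_urls
    while page < last_page:  # strict check: increment happens before formatting
        page += 1
        yield template % page


urls = list(follow_up_urls(URL_TEMPLATE, LAST_PAGE))
print(len(urls))  # 12
print(urls[0])    # https://www.qiushibaike.com/text/page/2/
print(urls[-1])   # https://www.qiushibaike.com/text/page/13/
```

Because the counter is incremented before the URL is formatted, a `<=` comparison against the last page number would produce one extra request, for page 14.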
