Installing the requests library

Run `pip install requests` in a terminal to install it.

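If pip reports that it is out of date, upgrade it with `python -m pip install --upgrade pip` (two ASCII hyphens before `upgrade`). `python -m pip install -U pip` does the same thing, since `-U` is the short form of `--upgrade`.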
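To confirm the installation, you can ask Python whether the package is importable and which version is installed. This is a minimal sketch that uses only the standard library (`importlib.util.find_spec` and `importlib.metadata`, Python 3.8+). The helper name `installed_version` is hypothetical. It assumes the distribution name matches the import name, which is true for requests.

```python
import importlib.util
from importlib import metadata


def installed_version(package):
    """Return the installed version of `package`, or None if it is unavailable.

    Hypothetical helper; assumes the import name equals the distribution name.
    """
    if importlib.util.find_spec(package) is None:  # not importable at all
        return None
    try:
        return metadata.version(package)  # version recorded by pip
    except metadata.PackageNotFoundError:  # importable but no pip metadata
        return None


print("requests:", installed_version("requests"))
```

If this prints `requests: None`, the package was installed into a different interpreter than the one running the script. In PyCharm, that is often the project interpreter.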

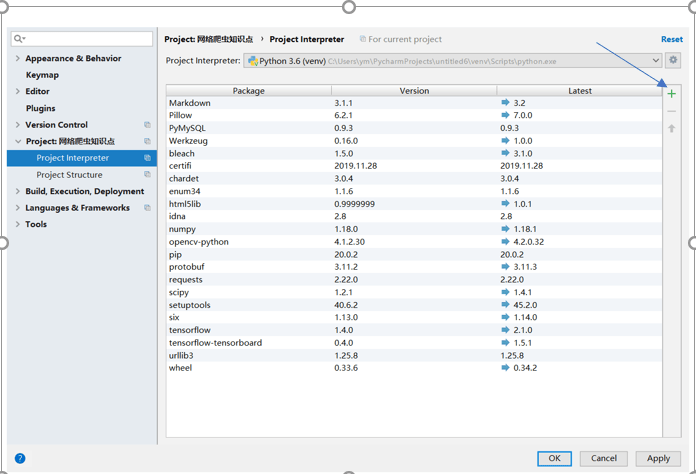

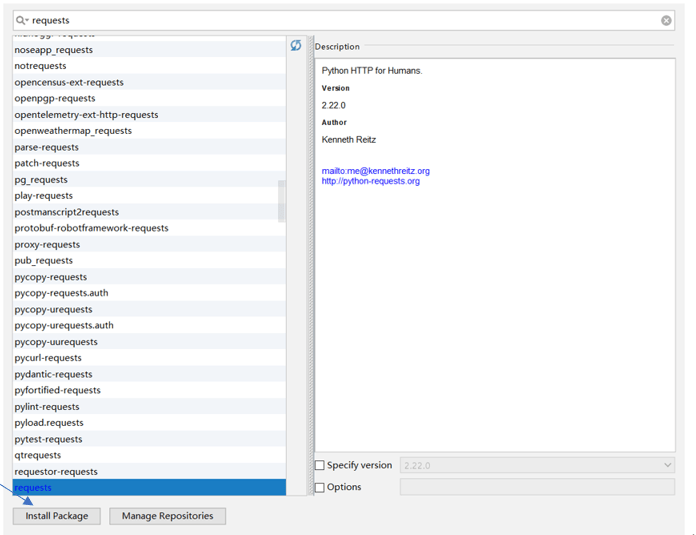

Installing requests in PyCharm

Click File -> Settings -> Project Interpreter, then click the green plus (+) button.

Search for requests and, once it appears, click Install Package.

Web crawler examples

1. Scraping a JD.com product page

    import requests

    url = "https://item.jd.com/100007934914.html"
    try:
        r = requests.get(url)
        r.raise_for_status()  # raise HTTPError for 4xx/5xx status codes
        r.encoding = r.apparent_encoding  # apparent_encoding: the encoding guessed from the response body
        print(r.text[:1000])
    except requests.RequestException:
        print("Error")
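The `r.encoding = r.apparent_encoding` line is needed because the requests documentation says that, when a `text/*` response has no charset in its headers, requests falls back to ISO-8859-1. A Chinese page decoded that way comes out garbled. This stdlib-only sketch reproduces the effect on plain bytes, with no network access. It assumes the page is UTF-8; the strings are illustrative.

```python
original = "网络爬虫"                 # "web crawler"
raw_bytes = original.encode("utf-8")  # the bytes the server actually sends

# Assumption: no charset in Content-Type, so the body is decoded as ISO-8859-1.
garbled = raw_bytes.decode("iso-8859-1")

# Decoding the same bytes with the encoding detected from the content
# (what r.apparent_encoding is meant to supply) recovers the text.
repaired = raw_bytes.decode("utf-8")

print(repr(garbled))
print(repaired)
```

ISO-8859-1 maps every byte to some character, so the wrong decoding never raises an error. It silently produces mojibake, which is why the mistake is easy to miss.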

2. Scraping an Amazon product page

    import requests

    url = "https://www.amazon.cn/dp/B0798J3G37/ref=s9_acsd_hps_bw_c2_x_0_i?pf_rd_m=A1U5RCOVU0NYF2&pf_rd_s=merchandised-search-2&pf_rd_r=9X1Y8MVKZSE7T4TB76N5&pf_rd_t=101&pf_rd_p=3f149c06-7221-4924-a124-ebbe0310b38c&pf_rd_i=116169071"
    r = requests.get(url)
    print(r.status_code)      # the author got 503
    print(r.request.headers)  # {'User-Agent': 'python-requests/2.22.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}

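Fetching Amazon the same way as in the first example fails, because the site rejects requests from crawlers. The request headers above show how it can tell: requests identifies itself with the User-Agent `python-requests/2.22.0`. The fix is to send a browser-style User-Agent instead: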

    import requests

    url = "https://www.amazon.cn/dp/B0798J3G37/ref=s9_acsd_hps_bw_c2_x_0_i?pf_rd_m=A1U5RCOVU0NYF2&pf_rd_s=merchandised-search-2&pf_rd_r=9X1Y8MVKZSE7T4TB76N5&pf_rd_t=101&pf_rd_p=3f149c06-7221-4924-a124-ebbe0310b38c&pf_rd_i=116169071"
    kv = {'user-agent': 'Mozilla/5.0'}  # a browser-style User-Agent
    try:
        r = requests.get(url, headers=kv)  # pretend to be a browser the site accepts
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[:1000])
    except requests.RequestException:
        print("Error")

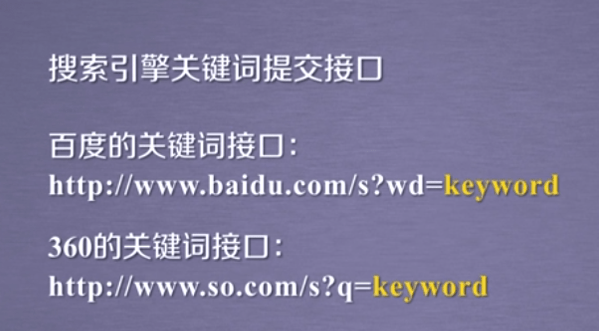
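You can inspect the header change without contacting Amazon by building the request and never sending it. `requests.get()` runs through a `Session`, and `Session.prepare_request()` merges the session's default headers with your own. This sketch uses those two calls. The helper name `prepare_get` is hypothetical, and the shortened product URL is only used to build the request. Header keys are case-insensitive, so the lowercase `'user-agent'` key from the example replaces the default `User-Agent`.

```python
import requests


def prepare_get(url, headers=None):
    """Build, but do not send, a GET request with requests' default headers merged in."""
    with requests.Session() as session:  # the session supplies the default headers
        request = requests.Request("GET", url, headers=headers or {})
        return session.prepare_request(request)


url = "https://www.amazon.cn/dp/B0798J3G37"  # illustrative; nothing is sent
default = prepare_get(url)
spoofed = prepare_get(url, headers={"user-agent": "Mozilla/5.0"})

print(default.headers["User-Agent"])  # python-requests/<installed version>
print(spoofed.headers["User-Agent"])  # Mozilla/5.0
```

Only the User-Agent differs between the two. The other defaults, such as Accept-Encoding, are still sent.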

3. Submitting search keywords to Baidu and 360 Search

    import requests

    url = "http://www.baidu.com/s"
    kv = {'wd': '网络爬虫'}  # wd carries the search keyword ("web crawler")
    try:
        r = requests.get(url, params=kv)
        # r.request.url shows the URL that was actually requested. In the author's run it was
        # a Baidu captcha page rather than the results page:
        # https://wappass.baidu.com/static/captcha/tuxing.html?&ak=c27bbc89afca0463650ac9bde68ebe06&backurl=https%3A%2F%2Fwww.baidu.com%2Fs%3Fwd%3D%25E7%25BD%2591%25E7%25BB%259C%25E7%2588%25AC%25E8%2599%25AB&logid=12247858540635199189&signature=65e8ca98898eac5d00aec9053bc119e0&timestamp=1581328705
        print(r.request.url)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(len(r.text))
    except requests.RequestException:
        print("Error")
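The `params` dictionary is percent-encoded as UTF-8 and appended to the URL as a query string. That is why `网络爬虫` appears as `%E7%BD%91...` inside the `backurl` above. This sketch builds the request offline with `requests.Request(...).prepare()` and reads back the resulting URL. The 360 Search line is an assumption: it supposes that https://www.so.com/s takes its keyword in a `q` parameter, which the example above does not show. The helper name `search_url` is hypothetical.

```python
import requests


def search_url(base, key, keyword):
    """Return the URL that requests.get(base, params={key: keyword}) would request."""
    prepared = requests.Request("GET", base, params={key: keyword}).prepare()
    return prepared.url


baidu = search_url("http://www.baidu.com/s", "wd", "网络爬虫")
so360 = search_url("https://www.so.com/s", "q", "网络爬虫")  # assumption: 360 Search uses q

print(baidu)  # http://www.baidu.com/s?wd=%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB
print(so360)
```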

4. Downloading and saving an image

    # Download an image and save it under its original file name
    import os
    import requests

    url = "http://image.ngchina.com.cn/2019/0802/20190802010628808.jpg"
    root = "D:/picture/"
    path = root + url.split('/')[-1]  # the last '/'-separated piece of the URL is the file name
    try:
        if not os.path.exists(root):  # create the root directory if it does not exist
            os.mkdir(root)
        if not os.path.exists(path):  # download only if the file is not already there
            r = requests.get(url)
            r.raise_for_status()
            with open(path, 'wb') as fp:  # images are binary data; `with` closes the file
                fp.write(r.content)
            print("success")
        else:
            print("file already exists")
    except (requests.RequestException, OSError):
        print("Error")
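`url.split('/')[-1]` breaks if the image URL carries a query string (for example `...jpg?w=800`), because the query ends up in the file name. This is a stdlib sketch of a more robust version. It takes the name from the URL path with `urllib.parse.urlsplit` and passes the download step in as a function, so the save logic can be tried without network access. Both helper names, `local_path_for` and `save_if_missing`, are hypothetical.

```python
import os
from urllib.parse import unquote, urlsplit


def local_path_for(url, root):
    """Map an image URL to root/<file name>, ignoring any query string (hypothetical helper)."""
    name = os.path.basename(unquote(urlsplit(url).path))
    if not name:
        raise ValueError(f"URL has no file name: {url!r}")
    return os.path.join(root, name)


def save_if_missing(url, root, fetch):
    """Write fetch(url) to disk unless the file already exists.

    `fetch` is any callable returning the body as bytes (hypothetical wiring).
    Returns True if a file was written, False if it already existed.
    """
    os.makedirs(root, exist_ok=True)  # like the os.mkdir check above, but also creates parents
    path = local_path_for(url, root)
    if os.path.exists(path):
        return False
    with open(path, "wb") as fp:  # binary mode for image bytes
        fp.write(fetch(url))
    return True
```

With requests, `fetch` could be a small function that calls `requests.get(u, timeout=10)`, then `raise_for_status()`, and returns `r.content`.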

5. Automatically looking up the location of an IP address

    # Look up an IP address through the ip138 site
    import requests

    url = "http://m.ip138.com/ip.asp?ip="
    ip = '121.251.136.3'
    try:
        r = requests.get(url + ip)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[-500:])  # print the last 500 characters of the page
    except requests.RequestException:
        print("Error")
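Concatenating `url + ip` sends whatever string `ip` holds, typos included. This sketch validates the address with the standard `ipaddress` module before building the same ip138 query URL. The helper name `ip138_query_url` is hypothetical. Whether ip138 accepts IPv6 addresses is not covered here.

```python
import ipaddress
from urllib.parse import urlencode


def ip138_query_url(ip):
    """Validate `ip`, then build the ip138 query URL (hypothetical helper)."""
    addr = ipaddress.ip_address(ip)  # raises ValueError for malformed input
    return "http://m.ip138.com/ip.asp?" + urlencode({"ip": str(addr)})


print(ip138_query_url("121.251.136.3"))  # http://m.ip138.com/ip.asp?ip=121.251.136.3
```

Passing `params={'ip': ip}` to `requests.get()` produces the same query string and leaves the encoding to requests.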

// This is my first post, so please forgive any rough spots. //
